KVM


Revision as of 09:44, 15 July 2018


Basic concepts

hypervisor

A hypervisor or virtual machine monitor (VMM) is computer software, firmware or hardware that creates and runs virtual machines. A computer on which a hypervisor runs one or more virtual machines is called a host machine, and each virtual machine is called a guest machine. The hypervisor presents the guest operating systems with a virtual operating platform and manages the execution of the guest operating systems. Multiple instances of a variety of operating systems may share the virtualized hardware resources.

- Type 1 hypervisor: runs directly on the system hardware (a "bare metal" embedded hypervisor).

- Type 2 hypervisor: runs on a host operating system that provides virtualization services, such as I/O device support and memory management.

KVM

Kernel-based Virtual Machine (KVM) is a virtualization infrastructure for the Linux kernel that turns it into a hypervisor. It was merged into the Linux kernel mainline in kernel version 2.6.20, which was released on February 5, 2007. KVM requires a processor with hardware virtualization extensions.

libvirt

libvirt is an open-source API, daemon and management tool for managing platform virtualization.[3] It can be used to manage KVM, Xen, VMware ESX, QEMU and other virtualization technologies. These APIs are widely used in the orchestration layer of hypervisors in the development of a cloud-based solution.

virsh

The virsh tool is built on the libvirt management API and operates as an alternative to the xm tool and the graphical guest manager (virt-manager). Unprivileged users can employ this utility for read-only operations.

Virtual Machine Manager (app)

The virt-manager application is a desktop user interface for managing virtual machines through libvirt. It primarily targets KVM VMs, but also manages Xen and LXC (linux containers). It presents a summary view of running domains, their live performance & resource utilization statistics. Wizards enable the creation of new domains, and configuration & adjustment of a domain’s resource allocation & virtual hardware. An embedded VNC and SPICE client viewer presents a full graphical console to the guest domain.

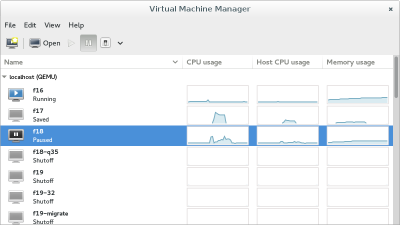

Manage machines

List machine

The XML descriptors of the virtual machines are stored in the /etc/libvirt/qemu directory:

# ll /etc/libvirt/qemu
total 40
drwxr-xr-x  2 root root 4096 Jun 28 20:42 autostart
-rw-------  1 root root 4222 Sep 18  2016 centOS6test.xml
-rw-------  1 root root 4430 Mar  3 18:56 centos7.xml
-rw-------  1 root root 3006 Jul 14 17:46 mg0.xml
drwx------. 3 root root 4096 Jun 22 19:26 networks
-rw-------  1 root root 4246 Jan  6  2017 rhel7.2_openShift.xml

If a machine is configured to start automatically, libvirt creates a symbolic link to its descriptor in the autostart directory:

# virsh autostart mg0
# ll /etc/libvirt/qemu/autostart/
total 0
lrwxrwxrwx 1 root root 25 Jul 15 11:05 mg0.xml -> /etc/libvirt/qemu/mg0.xml

To disable autostart:

# virsh autostart mg0 --disable

The XML descriptors in /etc/libvirt/qemu can be edited with the virsh edit command, which opens the file in the editor named by the EDITOR environment variable:

# export EDITOR=mcedit
# virsh edit mg0
...

List VMs

With the --all switch, virsh list also shows the VMs that are not running.

# virsh list --all
 Id    Name                           State
----------------------------------------------------
 4     mg0                            running
 -     centOS6test                    shut off

VM info

# virsh dominfo mg0
Id:             4
Name:           mg0
UUID:           8cd073a2-577a-438e-a449-681a58100fb3
OS Type:        hvm
State:          running
CPU(s):         1
CPU time:       26.7s
Max memory:     1048576 KiB
Used memory:    1048576 KiB
Persistent:     yes
Autostart:      disable
Managed save:   no
Security model: none
Security DOI:   0
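When the dominfo output has to be processed by a script, its "Key: value" lines are easy to turn into a dictionary. The following is only a minimal Python sketch working on a pasted sample of the output above; the function name parse_dominfo is made up for this illustration and is not part of libvirt.

```python
def parse_dominfo(text):
    """Turn the 'Key: value' lines printed by `virsh dominfo` into a dict."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:  # skip blank lines and anything without a colon
            info[key.strip()] = value.strip()
    return info


# Sample copied from the dominfo output shown above (shortened).
SAMPLE = """\
Id:             4
Name:           mg0
UUID:           8cd073a2-577a-438e-a449-681a58100fb3
State:          running
Max memory:     1048576 KiB
Autostart:      disable
"""

info = parse_dominfo(SAMPLE)
max_mem_kib = int(info["Max memory"].split()[0])
print(info["Name"], info["State"], max_mem_kib // 1024, "MiB")
# prints: mg0 running 1024 MiB
```

On a real host the text would come from running virsh dominfo, for example through subprocess; that part is left out so the sketch runs without libvirt.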

Create machines

Manage networks

List all

List all the running networks:

# virsh net-list
 Name                 State      Autostart     Persistent
----------------------------------------------------------
 default              active     yes           yes
 docker-machines      active     yes           yes

With the --all switch, the inactive networks are listed as well.

Show the details of a single network:

# virsh net-info default
Name:           default
UUID:           3eb5cb82-b9ea-4a6e-8e54-1efea603f90c
Active:         yes
Persistent:     yes
Autostart:      yes
Bridge:         virbr0

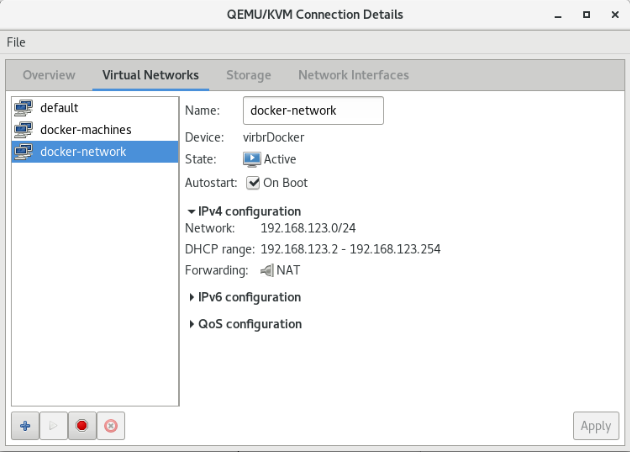

Add new network

Create the network description file: https://libvirt.org/formatnetwork.html

<network>
  <name>docker-network</name>
  <bridge name="virbrDocker"/>
  <forward mode="nat"/>
  <ip address="192.168.123.1" netmask="255.255.255.0">
    <dhcp>
      <range start="192.168.123.2" end="192.168.123.254"/>
    </dhcp>
  </ip>
  <ip family="ipv6" address="2001:db8:ca2:2::1" prefix="64"/>
</network>

- name: The content of the name element provides a short name for the virtual network. This name should consist only of alpha-numeric characters and is required to be unique within the scope of a single host. It is used to form the filename for storing the persistent configuration file. Since 0.3.0

- uuid: The content of the uuid element provides a globally unique identifier for the virtual network. The format must be RFC 4122 compliant, eg 3e3fce45-4f53-4fa7-bb32-11f34168b82b. If omitted when defining/creating a new network, a random UUID is generated. Since 0.3.0

- bridge: The name attribute on the bridge element defines the name of a bridge device which will be used to construct the virtual network. The virtual machines will be connected to this bridge device allowing them to talk to each other. The bridge device may also be connected to the LAN. When defining a new network with a <forward> mode of "nat" or "route" (or an isolated network with no <forward> element), libvirt will automatically generate a unique name for the bridge device if none is given, and this name will be permanently stored in the network configuration so that the same name will be used every time the network is started. For these types of networks (nat, routed, and isolated), a bridge name beginning with the prefix "virbr" is recommended.

- forward: Inclusion of the forward element indicates that the virtual network is to be connected to the physical LAN. Since 0.3.0. The mode attribute determines the method of forwarding. If there is no forward element, the network will be isolated from any other network (unless a guest connected to that network is acting as a router, of course). The following are valid settings for mode (if there is a forward element but mode is not specified, mode='nat' is assumed):

- nat: All traffic between guests connected to this network and the physical network will be forwarded to the physical network via the host's IP routing stack, after the guest's IP address is translated to appear as the host machine's public IP address (a.k.a. Network Address Translation, or "NAT"). This allows multiple guests, all having access to the physical network, on a host that is only allowed a single public IP address.

- route: Guest network traffic will be forwarded to the physical network via the host's IP routing stack, but without having NAT applied.
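Before defining the network, it can be worth checking that the DHCP range in the description file really lies inside the subnet given by the address and netmask attributes, and that it does not contain the bridge's own address. The Python sketch below does this with the standard ipaddress and xml.etree modules; the function name check_dhcp_ranges is invented for this example, it only looks at IPv4 ip elements, and libvirt performs its own validation in any case.

```python
import ipaddress
import xml.etree.ElementTree as ET

# The network description from above.
NETWORK_XML = """\
<network>
  <name>docker-network</name>
  <bridge name="virbrDocker"/>
  <forward mode="nat"/>
  <ip address="192.168.123.1" netmask="255.255.255.0">
    <dhcp>
      <range start="192.168.123.2" end="192.168.123.254"/>
    </dhcp>
  </ip>
  <ip family="ipv6" address="2001:db8:ca2:2::1" prefix="64"/>
</network>
"""


def check_dhcp_ranges(xml_text):
    """Return a list of problems found in the IPv4 DHCP ranges (empty if none)."""
    problems = []
    root = ET.fromstring(xml_text)
    for ip in root.findall("ip"):
        if ip.get("family", "ipv4") != "ipv4":
            continue  # this sketch only looks at IPv4
        mask = ip.get("netmask") or ip.get("prefix")
        if ip.get("address") is None or mask is None:
            continue
        gateway = ipaddress.ip_address(ip.get("address"))
        # strict=False accepts a host address and derives the network from it
        subnet = ipaddress.ip_network(f"{gateway}/{mask}", strict=False)
        for rng in ip.findall("dhcp/range"):
            start = ipaddress.ip_address(rng.get("start"))
            end = ipaddress.ip_address(rng.get("end"))
            if start > end:
                problems.append(f"range {start}-{end} is reversed")
            if start not in subnet or end not in subnet:
                problems.append(f"range {start}-{end} is outside {subnet}")
            if start <= gateway <= end:
                problems.append(f"range {start}-{end} contains the bridge address {gateway}")
    return problems


print(check_dhcp_ranges(NETWORK_XML))  # prints: []
```

For the file above the list is empty: 192.168.123.2 to 192.168.123.254 lies inside 192.168.123.0/24 and starts just after the bridge address 192.168.123.1.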

Add the new network based on the new file:

# virsh net-define docker-network.xml
Network docker-network defined from docker-network.xml

# virsh net-list --all
 Name                 State      Autostart     Persistent
----------------------------------------------------------
 default              active     yes           yes
 docker-machines      active     yes           yes
 docker-network       inactive   no            yes

Start the new network and make it auto start:

# virsh net-start docker-network
# virsh net-autostart docker-network

Check the new network: It should be listed among the interfaces:

# ifconfig
virbrDocker: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
        inet 192.168.123.1  netmask 255.255.255.0  broadcast 192.168.123.255
...

Let's check it in the virsh interactive shell, with the net-dumpxml command:

# virsh
Welcome to virsh, the virtualization interactive terminal.

Type:  'help' for help with commands
       'quit' to quit

virsh # net-dumpxml docker-network
<network>
  <name>docker-network</name>
  <uuid>fe2dd1e8-c32f-469c-b4ca-4338a0acfac5</uuid>
  <forward mode='nat'>
    <nat>
      <port start='1024' end='65535'/>
    </nat>
  </forward>
  <bridge name='virbrDocker' stp='on' delay='0'/>
  <mac address='52:54:00:9f:ff:ba'/>
  <ip address='192.168.123.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.123.2' end='192.168.123.254'/>
    </dhcp>
  </ip>
  <ip family='ipv6' address='2001:db8:ca2:2::1' prefix='64'>
  </ip>
</network>

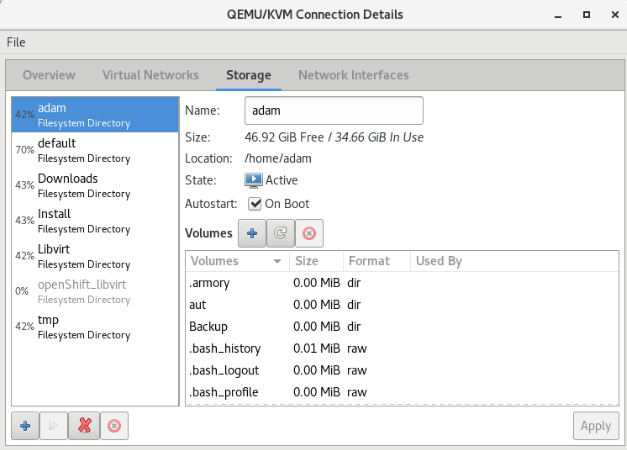

Manage storage

https://libvirt.org/storage.html

https://www.suse.com/documentation/sles11/book_kvm/data/sec_libvirt_storage_virsh.html

https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/virtualization_deployment_and_administration_guide/sect-managing_guest_virtual_machines_with_virsh-storage_pool_commands

A storage pool is a quantity of storage set aside by an administrator, often a dedicated storage administrator, for use by virtual machines. Storage pools are divided into storage volumes either by the storage administrator or the system administrator, and the volumes are assigned to VMs as block devices.

Pools

# virsh pool-list --details
 Name       State    Autostart  Persistent    Capacity  Allocation   Available
-------------------------------------------------------------------------------
 adam       running  yes        yes          81.58 GiB   34.68 GiB   46.90 GiB
 default    running  yes        yes          68.78 GiB   49.12 GiB   19.66 GiB
 Downloads  running  yes        yes         733.42 GiB  321.71 GiB  411.71 GiB
 Install    running  yes        yes         733.42 GiB  321.71 GiB  411.71 GiB
 Libvirt    running  yes        yes          81.58 GiB   34.68 GiB   46.90 GiB
 tmp        running  yes        yes          81.58 GiB   34.68 GiB   46.90 GiB

# virsh pool-info default
Name:           default
UUID:           9cfd52e2-64d7-4d55-9552-5ae6da49105b
State:          running
Persistent:     yes
Autostart:      yes
Capacity:       68.78 GiB
Allocation:     49.12 GiB
Available:      19.66 GiB

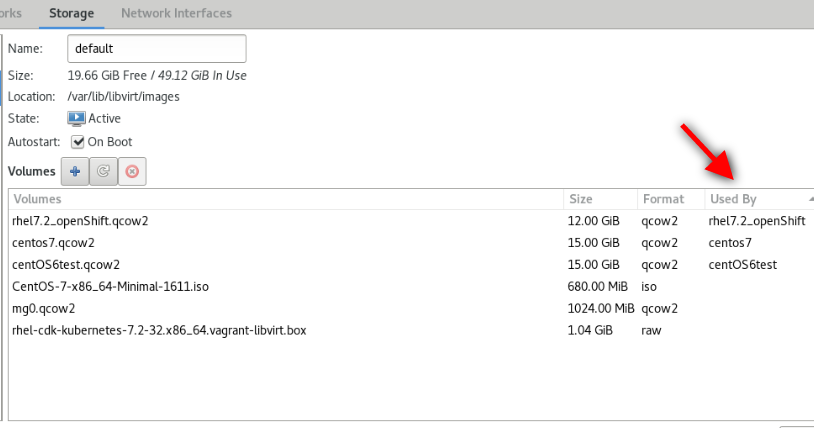

List Volumes

Unless specified otherwise, new VMs are created in the default pool. Let's list the volumes in that pool.

# virsh vol-list --details default
 Name                                                   Path                                                                           Type    Capacity  Allocation
--------------------------------------------------------------------------------------------------------------------------------------------------------------------
 CentOS-7-x86_64-Minimal-1611.iso                       /var/lib/libvirt/images/CentOS-7-x86_64-Minimal-1611.iso                       file  680.00 MiB  680.00 MiB
 centOS6test.qcow2                                      /var/lib/libvirt/images/centOS6test.qcow2                                      file   15.00 GiB    5.08 GiB
 centos7.qcow2                                          /var/lib/libvirt/images/centos7.qcow2                                          file   15.00 GiB    2.96 GiB
 mg0.qcow2                                              /var/lib/libvirt/images/mg0.qcow2                                              file    1.00 GiB  332.00 KiB
 rhel-cdk-kubernetes-7.2-32.x86_64.vagrant-libvirt.box  /var/lib/libvirt/images/rhel-cdk-kubernetes-7.2-32.x86_64.vagrant-libvirt.box  file    1.04 GiB    1.04 GiB
 rhel7.2_openShift.qcow2                                /var/lib/libvirt/images/rhel7.2_openShift.qcow2                                file   12.00 GiB    2.07 MiB

# virsh vol-info mg0.qcow2 --pool default
Name:           mg0.qcow2
Type:           file
Capacity:       1.00 GiB
Allocation:     332.00 KiB

Volumes of VM

Unfortunately, virsh provides no command that lists which VM uses which volume. Moreover, volumes that are not part of a pool may not be shown at all. The Virtual Machine Manager can show, in its pool volume list, which machine uses each volume.

However, there is a way to find this out from the command line as well. As we have already seen, the VM definitions are stored in XML files in the /etc/libvirt/qemu directory. We can look for the attached volumes in such a file by eye, or extract the relevant lines with an XSLT transformation using the xsltproc program.

Note

xsltproc is a command line tool for applying XSLT stylesheets to XML documents

We need the following XSLT stylesheet, saved as guest_storage_list.xsl:

<?xml version="1.0" encoding="UTF-8"?>
<xsl:stylesheet version="1.0"
    xmlns:xsl="http://www.w3.org/1999/XSL/Transform">
  <xsl:output method="text"/>
  <xsl:template match="text()"/>
  <xsl:strip-space elements="*"/>
  <xsl:template match="disk">
    <xsl:text> </xsl:text>
    <xsl:value-of select="(source/@file|source/@dev|source/@dir)[1]"/>
    <xsl:text>&#10;</xsl:text>
  </xsl:template>
</xsl:stylesheet>

Then we run it with xsltproc against the configuration file of the chosen VM:

# xsltproc guest_storage_list.xsl /etc/libvirt/qemu/mg0.xml
 /root/.docker/machine/machines/mg0/mg0.img
 /root/.docker/machine/machines/mg0/boot2docker.iso

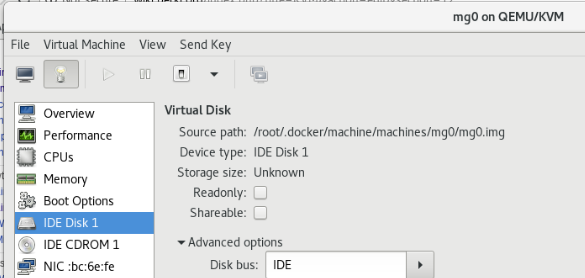

Kilistázta a virtuális merevlemezt és az installációra használt iso-t. Ezeket a Virtual Machine Manager-ben is láthatjuk:
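The same extraction can also be scripted without xsltproc, and extended to answer the original question of which VM uses which volume. The Python sketch below is an illustration only: the function names disk_sources and vms_by_volume are made up, the sample domain XML is a shortened, hypothetical stand-in for mg0.xml (the target elements are assumed), and a temporary directory stands in for /etc/libvirt/qemu, which is normally readable only by root. Unlike the stylesheet, it skips disks that have no source element.

```python
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path


def disk_sources(domain_xml):
    """Return the source path of every <disk> in a libvirt domain XML string."""
    root = ET.fromstring(domain_xml)
    paths = []
    for disk in root.iter("disk"):
        source = disk.find("source")
        if source is None:
            continue  # e.g. a CD-ROM drive with no medium inserted
        # Same attribute candidates as the stylesheet: file, dev, dir.
        for attr in ("file", "dev", "dir"):
            if source.get(attr):
                paths.append(source.get(attr))
                break
    return paths


def vms_by_volume(config_dir):
    """Map each volume path to the VM descriptor names that reference it."""
    usage = {}
    for xml_file in sorted(Path(config_dir).glob("*.xml")):
        for path in disk_sources(xml_file.read_text()):
            usage.setdefault(path, []).append(xml_file.stem)
    return usage


# Hypothetical, shortened domain definition used only for this demonstration.
SAMPLE = """\
<domain type='kvm'>
  <name>mg0</name>
  <devices>
    <disk type='file' device='disk'>
      <source file='/root/.docker/machine/machines/mg0/mg0.img'/>
      <target dev='hda' bus='ide'/>
    </disk>
    <disk type='file' device='cdrom'>
      <source file='/root/.docker/machine/machines/mg0/boot2docker.iso'/>
      <target dev='hdc' bus='ide'/>
    </disk>
  </devices>
</domain>
"""

with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "mg0.xml").write_text(SAMPLE)
    usage = vms_by_volume(tmp)

for volume, vms in usage.items():
    print(volume, "<-", ", ".join(vms))
```

On a real host, vms_by_volume("/etc/libvirt/qemu") run as root would build the same map from the actual descriptors; a volume listed with more than one VM name is shared between machines.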

The qemu-img command can be used to query the details of a given volume:

# qemu-img info /root/.docker/machine/machines/mg0/mg0.img
image: /root/.docker/machine/machines/mg0/mg0.img
file format: raw
virtual size: 4.9G (5242880000 bytes)
disk size: 192M
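The two sizes in this output differ because the virtual size is what the guest sees, while the disk size is the space the image file currently occupies on the host. As a small worked example, the Python sketch below pulls the exact byte count out of the human-readable output and converts it; the function name virtual_size_bytes is invented here, and the sample text is simply the output shown above.

```python
import re

# Output of `qemu-img info` copied from above.
SAMPLE = """\
image: /root/.docker/machine/machines/mg0/mg0.img
file format: raw
virtual size: 4.9G (5242880000 bytes)
disk size: 192M
"""


def virtual_size_bytes(info_text):
    """Extract the exact virtual size in bytes from `qemu-img info` output."""
    match = re.search(r"^virtual size:.*\((\d+) bytes\)", info_text, re.MULTILINE)
    if match is None:
        raise ValueError("no 'virtual size' line found")
    return int(match.group(1))


size = virtual_size_bytes(SAMPLE)
print(f"{size / 1024**2:.0f} MiB = {size / 1024**3:.1f} GiB")
# prints: 5000 MiB = 4.9 GiB
```

So the image was created with a size of 5000 MiB, of which only about 192 MiB is allocated on the host so far.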