Openshift - HAproxy metrics EN


<br>

==HAproxy log structure==

https://www.haproxy.com/blog/introduction-to-haproxy-logging/

<br>

HAproxy writes one log line with the following structure for each request-response pair:

<pre>
Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"
</pre>

<br>

Full specification: https://github.com/berkiadam/haproxy-metrics/blob/master/ha-proxy-log-structure.pdf
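To make the field layout concrete, here is a minimal Python sketch that splits the example line into the fields of the HTTP log format with a single regular expression. The regex, its group names and the function parse_haproxy_line are hypothetical illustrations written for this one example line; they are not the grok patterns used later and do not cover every HAproxy log variant (e.g. TCP logs or captured headers).

```python
import re

# Hypothetical regex for the HAproxy HTTP log line shown above. Group names
# loosely follow the HAproxy log format fields; this is a sketch, not a
# complete grammar.
HAPROXY_HTTP_LOG = re.compile(
    r"^(?P<syslog_date>\w{3}\s+\d+ \d{2}:\d{2}:\d{2}) "
    r"(?P<instance>\S+) "
    r"(?P<process>\w+)\[(?P<pid>\d+)\]: "
    r"(?P<client_ip>[\d.]+):(?P<client_port>\d+) "
    r"\[(?P<request_date>[^\]]+)\] "
    r"(?P<frontend>\S+) "
    r"(?P<backend>[^/\s]+)/(?P<server>\S+) "
    r"(?P<timers>[-+\d/]+) "
    r"(?P<status_code>\d{3}) "
    r"(?P<bytes_read>\+?\d+) "
    r"(?P<req_cookie>\S+) (?P<resp_cookie>\S+) "
    r"(?P<termination_state>\S+) "
    r"(?P<conns>[+\d/]+) "
    r"(?P<queues>\d+/\d+) "
    r'"(?P<http_request>[^"]*)"$'
)

SAMPLE = (
    "Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 "
    "[06/Aug/2019:20:53:30.267] public "
    "be_edge_http:mynamespace:test-app-service/"
    "pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 "
    '1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"'
)


def parse_haproxy_line(line: str) -> dict:
    """Return the named fields of one HTTP log line, or raise ValueError."""
    match = HAPROXY_HTTP_LOG.match(line)
    if match is None:
        raise ValueError("line does not match the expected HTTP log format")
    return match.groupdict()


if __name__ == "__main__":
    for name, value in parse_haproxy_line(SAMPLE).items():
        print(f"{name:18} {value}")
```

The backend field (be_edge_http:namespace:service) and the server field (pod:name:service:ip:port) are the ones that carry the OpenShift namespace, service and pod name.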

<br>

<br>

==grok-exporter introduction==

Grok-exporter is a tool that processes logs based on regular expressions and produces the 4 basic types of Prometheus metrics:

* gauge
* counter
* histogram
* summary (quantile)

You can attach any number of labels to a metric, with values taken from the parsed elements of the log line. Grok-exporter is based on the '''logstash-grok''' implementation and uses the patterns and functions defined in logstash.

Detailed documentation: <br>
https://github.com/fstab/grok_exporter/blob/master/CONFIG.md<br>

<br>

The grok-exporter can handle three types of input:

* '''file''': we will use this one; it processes the log written by rsyslog.
* '''webhook''': this could be used if logstash were the rsyslog server and forwarded the logs to the grok-exporter webhook with the logstash "http-output" plugin.
* '''stdin''': with rsyslog, stdin could also be used. This requires the '''omprog''' module, which passes the messages read from the rsyslog socket to the stdin of a program, and restarts the program if it is no longer running. https://www.rsyslog.com/doc/v8-stable/configuration/modules/omprog.html

<br>

===Alternative solutions===

'''Fluentd''':<br>

Fluentd can also solve the problem. It requires three fluentd plugins (I have not tried this):

* fluent-plugin-rewrite-tag-filter
* fluent-plugin-prometheus

'''mtail''':<br>

Another alternative is Google's '''mtail''' project, which is said to process logs more efficiently than the grok engine.<br>

https://github.com/google/mtail

<br>

===Configuration file===

The grok-exporter configuration is in '''/etc/grok_exporter/config.yml'''. It has 5 sections:

* global:
* input: where and how to read the logs. It can be stdin, file or webhook. We will use the file input.
* grok: the location of the grok patterns. In the Docker image this is the '''/grok/patterns''' folder.
* metrics: the most important part. Here you define the metrics and the associated regular expressions (in the form of grok patterns).
* server: the port the server listens on.

<br>

====Metrics====

Each metric must be defined under its metric type. The four basic Prometheus metric types are supported: '''Gauge, Counter, Histogram, Summary''' (quantile).

Under the type, you must specify:

* name: the name of the metric.
* help: the help text of the metric.
* match: describes, similarly to a regular expression, the structure of the log lines the metric should match. Here you can use predefined grok patterns:
** '''BASIC grok patterns''': https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/grok-patterns
** '''HAPROXY patterns''': https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/haproxy
* labels: you can name the result groups of the match. A name can be referenced in the labels section, which creates a label whose value is the parsed data.

<br>

====match====

In match, you write a regular expression built from grok building blocks. It is assumed that the elements of the log line are separated by spaces. Each building block has the form '''%{PATTERN_NAME}''', where PATTERN_NAME must exist in a pattern collection. The most common one is '''%{DATA}''', which matches arbitrary data; because it is non-greedy, it stops at the first point where the rest of the expression can match. Several patterns are composed of multiple elementary patterns. If you want the text matched by a pattern to become a result group, you must name the pattern, for example:

<pre>
%{DATA:this_is_the_name}
</pre>

The value of the field matched by the pattern is then stored in the variable '''this_is_the_name''', which can be referenced when defining the value of the metric or when producing a label.
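To illustrate the naming rule, the following Python sketch expands grok references of the form %{PATTERN} and %{PATTERN:name} into a regular expression with named groups. The pattern table is a deliberately simplified, hypothetical subset (the real definitions are in the logstash pattern files linked above, and grok-exporter uses its own grok engine), so treat it only as a model of how named patterns become result groups.

```python
import re

# Simplified stand-ins for a few grok patterns (assumption: the real
# logstash definitions are more elaborate, e.g. NUMBER accepts more forms).
PATTERNS = {
    "DATA": r".*?",
    "GREEDYDATA": r".*",
    "WORD": r"\b\w+\b",
    "NUMBER": r"[+-]?(?:\d+(?:\.\d+)?|\.\d+)",
}

# Matches %{NAME} or %{NAME:field}.
GROK_REF = re.compile(r"%\{(?P<pattern>\w+)(?::(?P<field>\w+))?\}")


def grok_to_regex(expression: str) -> re.Pattern:
    """Expand grok references; named ones become named capture groups."""

    def expand(ref: re.Match) -> str:
        body = PATTERNS[ref.group("pattern")]
        field = ref.group("field")
        if field:
            return f"(?P<{field}>{body})"  # result group, usable in labels/value
        return f"(?:{body})"  # matched but not captured

    return re.compile(GROK_REF.sub(expand, expression))


rx = grok_to_regex("%{DATA:this_is_the_name} %{NUMBER:val}")
print(rx.fullmatch("myvalue 1.5").groupdict())
# {'this_is_the_name': 'myvalue', 'val': '1.5'}
```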

<br>

====labels====

In the labels section you can refer to the named patterns. The value of the field parsed from the log line is then assigned to the defined label. For example, with the '''%{DATA:this_is_the_name}''' pattern you could write the following label: <br>

<pre>
mylabel: '{{.this_is_the_name}}'
</pre>

Then, if the field matched by the %{DATA} pattern was 'myvalue', the metric would get the following label: '''{mylabel="myvalue"}''' <br>
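A minimal Python sketch of the label step, assuming the named groups have already been extracted as in the match section: dot-prefixed template references are replaced with the parsed values, and the result is rendered as a Prometheus label set. render_template and format_labels are hypothetical helpers; grok-exporter itself evaluates these strings as Go templates, which support far more than this plain substitution.

```python
import re

# Only handles the plain {{.field}} form used in the examples.
FIELD_REF = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render_template(template: str, fields: dict) -> str:
    """Replace every {{.field}} with the value parsed from the log line."""
    return FIELD_REF.sub(lambda ref: fields[ref.group(1)], template)


def escape_label_value(value: str) -> str:
    # The Prometheus text format escapes backslash, double quote and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def format_labels(labels: dict) -> str:
    """Render a label set such as {mylabel="myvalue"}."""
    pairs = ",".join(f'{k}="{escape_label_value(v)}"' for k, v in sorted(labels.items()))
    return "{" + pairs + "}"


fields = {"this_is_the_name": "myvalue"}
labels = {"mylabel": render_template("{{.this_is_the_name}}", fields)}
print(format_labels(labels))  # {mylabel="myvalue"}
```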

Let's look at an example: <br>

Given the following log line:

<pre>
7/30/2016 2:37:03 PM adam 1.5
</pre>

and the following metric rule in the grok config:

<source lang="yaml">
metrics:
...
user: '{{.user}}'
</source>

The metric will be named '''grok_example_lines_total'''. The resulting metrics:

<pre>
# HELP Example counter metric with labels.
...
</pre>

<br>
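The counter rule can be modelled in a few lines of Python: every matching line increments the series identified by its label values, and lines that do not match are ignored. The regex below is a hypothetical stand-in for the rule's match expression (which is not fully shown above); it assumes the user name is the fourth whitespace-separated field, as in the sample line.

```python
import re
from collections import Counter

# Hypothetical stand-in for the rule's match expression:
# <date> <time> <AM|PM> <user> <value>
LINE = re.compile(r"^\S+ \S+ (?:AM|PM) (?P<user>\S+) (?P<val>\S+)$")


def counter_exposition(log_lines, name="grok_example_lines_total"):
    """Count matching lines per user and render them in the text format."""
    counts = Counter()
    for line in log_lines:
        match = LINE.match(line)
        if match:  # non-matching lines do not touch the counter
            counts[match.group("user")] += 1
    output = [f"# TYPE {name} counter"]
    for user, n in sorted(counts.items()):
        output.append(f'{name}{{user="{user}"}} {n}')
    return output


print("\n".join(counter_exposition(["7/30/2016 2:37:03 PM adam 1.5", "unrelated line"])))
```

No value definition is needed here, which is exactly why a counter rule has no value section.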

====Determining the value of a metric====

For a counter metric you do not need to define a value, because it simply counts the matching log lines. For all other types you have to specify what is considered the value. This is done in the '''value''' section, where a named grok pattern from the match section is referenced with the same Go template syntax as in the labels. For example, given the following two log lines:

<pre>
7/30/2016 2:37:03 PM adam 1
7/30/2016 2:37:03 PM adam 5
</pre>

For these lines we define the following histogram with two buckets, 1 and 2:

<source lang="yaml">
metrics:
...
user: '{{.user}}'
</source>

This results in the following metrics:

<pre>
# HELP Example counter metric with labels.
...
</pre>

<br>
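How the two observations end up in the buckets can be checked with a small Python model of a Prometheus histogram: buckets are cumulative, so each observation is counted in every bucket whose upper bound le is greater than or equal to it, and the implicit +Inf bucket always equals the total count. The helper histogram is hypothetical and not part of grok-exporter.

```python
import math


def histogram(values, bounds):
    """Return (cumulative bucket counts, sum, count) like a Prometheus histogram."""
    buckets = {}
    for le in sorted(bounds) + [math.inf]:
        # Cumulative: an observation counts in every bucket with le >= value.
        buckets[le] = sum(1 for v in values if v <= le)
    return buckets, sum(values), len(values)


buckets, total, count = histogram([1, 5], [1, 2])
for le, n in buckets.items():
    print(f'le="{"+Inf" if le == math.inf else le}"', n)
print("sum", total, "count", count)
```

The value 1 lands in the le="1" bucket because bucket bounds are inclusive, while 5 is only counted in +Inf.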

====Functions====

You can apply functions to the values of the metric (value) and to the labels. Functions require grok-exporter version '''0.2.7''' or later. Both string manipulation functions and arithmetic functions are available. The following two-argument arithmetic functions are supported:

* add
* subtract
* multiply
* divide

The function has the following syntax: <pre>{{FUNCTION_NAME ATTR1 ATTR2}}</pre> where ATTR1 and ATTR2 can be either a value derived from a pattern or a natural number. Values derived from a pattern are referenced the same way as in the labels, with a leading dot. For example, if we use the multiply function in the example above:

<source lang="yaml">
value: "{{multiply .val 1000}}"
</source>

Then the metrics change to:

<pre>
# HELP Example counter metric with labels.
# TYPE grok_example_lines histogram
grok_example_lines_bucket{user="adam", le="1"} 0
grok_example_lines_bucket{user="adam", le="2"} 0
grok_example_lines_bucket{user="adam", le="+Inf"} 2
...
</pre>

Since the two values become 1000 and 5000, both fall only into the +Inf bucket.
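The arithmetic functions can be sketched in Python as a tiny evaluator for the FUNCTION_NAME ATTR1 ATTR2 form: an argument starting with a dot is looked up among the parsed fields, anything else is read as a number. This is a hypothetical re-implementation for illustration only; grok-exporter evaluates these expressions as Go templates with its own function set, and this sketch is more lenient (it also accepts non-integer literals).

```python
import operator
import re

FUNCTIONS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}

CALL = re.compile(r"\{\{\s*(\w+)\s+(\S+)\s+(\S+)\s*\}\}")


def evaluate(template: str, fields: dict) -> float:
    """Evaluate a two-argument arithmetic template such as {{multiply .val 1000}}."""
    match = CALL.fullmatch(template.strip())
    if match is None:
        raise ValueError(f"unsupported template: {template!r}")
    name, first, second = match.groups()

    def argument(token: str) -> float:
        # ".val" refers to a named grok field; otherwise it is a literal number.
        return float(fields[token[1:]]) if token.startswith(".") else float(token)

    return FUNCTIONS[name](argument(first), argument(second))


scaled = [evaluate("{{multiply .val 1000}}", {"val": v}) for v in ("1", "5")]
print(scaled)  # [1000.0, 5000.0]
```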

<br>

<br>

==Creating a grok config file==

We need to write a grok pattern that matches the HAproxy access-log lines and extracts all the attributes that are important to us:

* total response time
* HAproxy instance id
* OpenShift service namespace
* pod name

<br>

Example HAproxy access log:

<pre>
Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"
</pre>

In config.yml we define a histogram of the total response time of the requests. This is a classic response-time histogram, typically with the following buckets (in seconds):

<pre>
[0.1, 0.2, 0.4, 1, 3, 8, 20, 60, 120]
</pre>

By convention, response time metrics are named '''<prefix>_http_request_duration_seconds'''.

'''config.yml'''

* '''type: file''' -> read the logs from a file
* '''path: /var/log/messages''' -> by default, the rsyslog server writes the logs to /var/log/messages
* '''readall: true''' -> always read the entire log file. Use this only for testing; in a live environment it must always be set to false.
* '''patterns_dir: ./patterns''' -> the pattern definitions are included in the docker image
* <pre>value: "{{divide .Tt 1000}}"</pre> The response time in the HAproxy log is in milliseconds and must be converted to seconds.
* '''port: 9144''' -> the /metrics endpoint is served on this port.

<br>

{{warning|Do not forget to set '''readall''' to '''false''' in a live environment, as leaving it on greatly reduces efficiency.}}

<br>

<br>

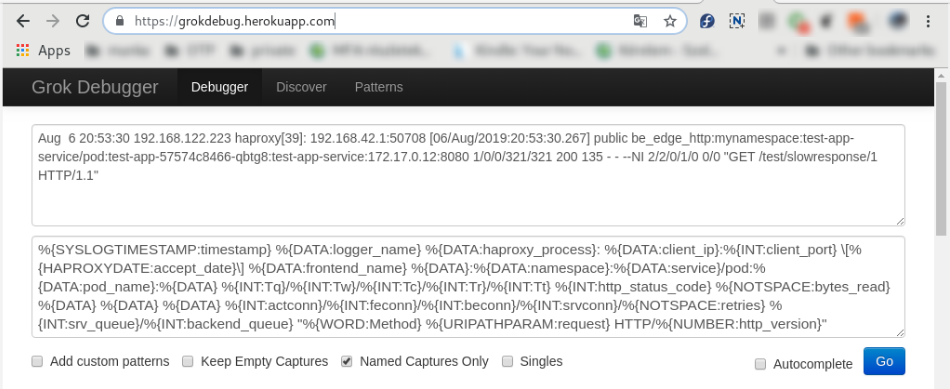

===Online grok tester===

There are several online grok testing tools. They are very effective for putting together the required grok pattern: https://grokdebug.herokuapp.com/

:[[File:ClipCapIt-190808-170333.PNG]]

<br>

==Making the docker image==

The grok-exporter docker image is available on Docker Hub in several versions. The problem is that none of them includes an rsyslog server, which we need so that HAproxy can send its logs directly to the grok-exporter pods. <br>

docker-hub link: https://hub.docker.com/r/palobo/grok_exporter <br>

<br>

The second problem is that they are based on an Ubuntu base image, where it is very difficult to get rsyslog to log to stdout. Logging to stdout is required so that the Kubernetes centralized log collector also receives the HAproxy logs, and thus both monitoring and centralized logging can be served. Therefore, the original Dockerfile will be ported to '''centos 7''' and extended with the installation of the rsyslog server.

<br>

All necessary files are available on GitHub: https://github.com/berkiadam/haproxy-metrics/tree/master/grok-exporter-centos <br>

I also created an Ubuntu-based solution, which extends the original docker-hub solution; it can be found on GitHub in the '''grok-exporter-ubuntu''' folder. For the rest of this howto, we will always use the CentOS version.

<br>

<br>

===Dockerfile===

We start from the '''palobo/grok_exporter''' Dockerfile, extend it with the rsyslog installation and port it to CentOS: https://github.com/berkiadam/haproxy-metrics/tree/master/grok-exporter-centos

<br>

➲[[File:Grok-exporter-docker-build.zip|Download all files required for Docker image build]]

<br>

<source lang="dockerfile">
...
CMD sh -c "nohup /usr/sbin/rsyslogd -i ${PID_DIR}/pid -n &" && ./grok_exporter -config /grok/config.yml
</source>

{{note|It is important to use at least version 0.2.7 of grok-exporter, because function handling first appeared in that version.}}

<br>

<br>

The following lines must be added to the '''rsyslog.conf''' file. They enable receiving logs on port 514 over both UDP and TCP, and write all logs to stdout and to /var/log/messages (see the zip above for the complete file).

<pre>
$ModLoad omstdout.so
...
</pre>

<br>
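To exercise the UDP listener without a running router, you can send a single syslog line yourself. The Python sketch below builds a BSD-syslog (RFC 3164) style message with facility local1 and severity debug, matching the "log 127.0.0.1 local1 debug" line of the HAproxy configuration, and sends it over UDP. The host, the tag and the helper names are assumptions for a local test. When the container is run with docker, the UDP port has to be published explicitly (for example -p 514:514/udp), because -p 514:514 publishes TCP only.

```python
import socket
import time

LOCAL1 = 17  # syslog facility code for local1
DEBUG = 7    # syslog severity code for debug


def syslog_message(msg: str, hostname: str, tag: str = "haproxy[39]") -> bytes:
    """Build an RFC 3164 style datagram: <PRI>TIMESTAMP HOSTNAME TAG: MSG."""
    pri = LOCAL1 * 8 + DEBUG  # 143
    now = time.localtime()
    # RFC 3164 timestamp "Mmm dd hh:mm:ss" with a space-padded day
    # (the month name comes from the current locale; "C" gives English).
    timestamp = f"{time.strftime('%b', now)} {now.tm_mday:2d} {time.strftime('%H:%M:%S', now)}"
    return f"<{pri}>{timestamp} {hostname} {tag}: {msg}".encode("utf-8")


def send_udp(payload: bytes, host: str = "127.0.0.1", port: int = 514) -> None:
    """Send one datagram; UDP gives no delivery confirmation."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

Once the container is running, send_udp(syslog_message("test", socket.gethostname())) should make the line appear both on the container's stdout and in /var/log/messages inside it, according to the rsyslog configuration above.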

===Local build and local test===

First, we build the docker image with the local docker daemon so that we can run it locally for testing. Later we will build it on the minishift VM as well, since the image can only be pushed to the minishift docker registry from there. Because the image will be pushed to a remote (not local) docker registry, it is important to follow the naming convention:

<pre>
<repo URL>:<repo port>/<namespace>/<image-name>:<tag>
</pre>

We will push the image to the docker registry running on minishift, so the image name must contain the address and port of the minishift docker registry and the OpenShift namespace where the image will be placed:

<pre>
# docker build -t 172.30.1.1:5000/default/grok_exporter:1.1.0 .
</pre>

The resulting image can be tested with the local docker daemon. Create a HAproxy test log file ('''haproxy.log''') with the following content. The grok-exporter will process it as if it had been sent by HAproxy.

<pre>
Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"
</pre>

<br>

Put the '''config.yml''' grok file created above into the same folder. In config.yml, change input.path to '''/grok/haproxy.log''' so that the grok-exporter processes our test log file. Then start the container with '''docker run''':

<pre>
# docker run -d -p 9144:9144 -p 514:514 -v $(pwd)/config.yml:/etc/grok_exporter/config.yml -v $(pwd)/haproxy.log:/grok/haproxy.log --name grok 172.30.1.1:5000/default/grok_exporter:1.1.0
</pre>

<br>

After starting, check in the log that both grok and rsyslog have actually started:

<pre>
# docker logs grok
 * Starting enhanced syslogd rsyslogd
   ...done.
Starting server on http://7854f3a9fe76:9144/metrics
</pre>

<br>

The metrics are then available in the browser at http://localhost:9144/metrics:

<pre>
...
</pre>

<br>

<br>
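Instead of the browser, the endpoint can also be checked from a script. This Python sketch fetches the text exposition from http://localhost:9144/metrics (the port published by the docker run command above) and keeps the samples whose name starts with a given prefix. scrape and read_samples are hypothetical helpers, and the parser is simplified: it assumes each sample line ends with its value and carries no timestamp.

```python
import urllib.request


def scrape(url: str = "http://localhost:9144/metrics", timeout: float = 5.0) -> str:
    """Download the Prometheus text exposition from the grok-exporter."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def read_samples(text: str, prefix: str) -> dict:
    """Map 'name{labels}' to its value for samples whose name starts with prefix."""
    samples = {}
    for line in text.splitlines():
        if not line or line.startswith("#") or not line.startswith(prefix):
            continue
        series, _, value = line.rpartition(" ")
        samples[series] = float(value)  # float() also accepts "+Inf" and "NaN"
    return samples


# Usage against the running container; replace the prefix with your metric name:
# print(read_samples(scrape(), "<prefix>_http_request_duration_seconds"))
```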

As a second step, verify that the '''rsyslog''' running in the docker container can receive remote log messages. To do this, first enter the container and look at the /var/log/messages file:

<pre>
# docker exec -it grok /bin/bash
</pre>

Revision as of 22:11, 19 November 2019


Contents

- 1 Preliminary

- 2 Using HAproxy Metric Endpoint

- 3 Collecting metrics from logs

- 4 OpenShift router + rsyslog

Preliminary

Overview

In OpenShift 3.11, the default router is the HAProxy template router. It is based on the 'openshift3/ose-haproxy-router' image. The image runs two components inside one container: one is HAproxy itself, the other is the router controller (the template-router-plugin), which maintains the HAproxy configuration. The router pod listens on the host machine's network interface and directs external requests to the appropriate pod within the OpenShift cluster. Unlike Kubernetes Ingress, OpenShift routers do not have to run on all nodes; they are installed only on dedicated nodes, so external traffic must be directed to the public IP address of these nodes.

HAProxy provides standard prometheus metrics through the router's Kubernetes service. The problem is that only a very small part of the metrics provided by HAproxy can be used meaningfully. Unfortunately, the http response-time metric is calculated from the average of the last 1024 requests, making it completely unsuitable for real-time monitoring purposes.

Information about the individual requests and responses is only available in the HAProxy access log, and only in debug mode; there, however, it is very detailed and contains all parameters of the requests and responses. These logs can be used to generate Prometheus metrics with several tools (e.g. grok-exporter, Fluentd, Logstash).

HAproxy main configuration

The HAproxy configuration file is located in '/var/lib/haproxy/conf/haproxy.config'. It contains all the services that are configured in the router.

/var/lib/haproxy/conf/haproxy.config

global
  ...
  log 127.0.0.1 local1 debug

backend config:
##-------------- app level backends ----------------
....
backend be_edge_http:mynamespace:test-app-service
  mode http
  ...
  server pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 172.17.0.12:8080 cookie babb614345c3670472f80ce0105a11b0 weight 256

The backends that belong to the route are listed in the app level backends section. You can see in the example that the backend called test-app-service is available at 172.17.0.12:8080.

Git repository

All the required resources used in the following implementation are available in the following git repository: https://github.com/berkiadam/haproxy-metrics

Http test application

To generate HTTP traffic, I made a test application that can return responses with arbitrary response times and HTTP response codes. Source available at https://github.com/berkiadam/haproxy-metrics/tree/master/test-app

Kubernetes files can be found at the root of the git repository.

Once installed, the app is available at:

- http://test-app-service-mynamespace.192.168.42.185.nip.io/test/slowresponse/<delay in milliseconds>

- http://test-app-service-mynamespace.192.168.42.185.nip.io/test/slowresponse/<delay in milliseconds>/<http response code>

Using HAproxy Metric Endpoint

HAproxy has a built-in metrics endpoint, which by default provides metrics in Prometheus format (CSV output is still available). Most of the metrics it provides are not really meaningful. Two metrics are definitely worth observing in Prometheus, both broken down by backend: the HTTP response counter (e.g. 200 responses) and the response error counter.

The metrics endpoint (/metrics) is enabled by default. It can be turned off, but HAProxy will still collect the metrics. The HAproxy pod is made up of two components: HAproxy itself and the router controller that manages the HAproxy configuration. The metrics manager collects metrics from both components every 5 seconds. The metrics include frontend and backend metrics collected by separate services.

Query Metrics

There are two ways to query metrics.

- username + password: the /metrics endpoint is called with basic authentication.

- Defining RBAC Rules for the appropriate serviceAccount: For machine processing (Prometheus) it is possible to enable RBAC rules for a given service account to query the metrics.

User + password based query

For a user/password based query, the default metrics URL is:

http://<user>:<password>@<router_IP>:<STATS_PORT>/metrics

The user, password, and port can be found in the service definition of the router. First, find the router service:

# kubectl get svc -n default
NAME     TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                   AGE
router   ClusterIP   172.30.130.191   <none>        80/TCP,443/TCP,1936/TCP   4d

You can see that it is listening on port '1936', which is the port of the metrics endpoint.

Now, let's look at the service definition to get the user and password:

# kubectl get svc router -n default -o yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.openshift.io/password: 4v9a7ucfMi
    prometheus.openshift.io/username: admin
...

Based on this, using the node's IP address (the minishift IP), the URL of the metrics endpoint is http://admin:4v9a7ucfMi@192.168.42.64:1936/metrics. A browser may refuse to open a URL with embedded credentials, so use curl instead:

# curl admin:4v9a7ucfMi@192.168.42.64:1936/metrics
# HELP apiserver_audit_event_total Counter of audit events generated and sent to the audit backend.
# TYPE apiserver_audit_event_total counter
apiserver_audit_event_total 0
# HELP apiserver_client_certificate_expiration_seconds Distribution of the remaining lifetime on the certificate used to authenticate a request.
# TYPE apiserver_client_certificate_expiration_seconds histogram
apiserver_client_certificate_expiration_seconds_bucket{le="0"} 0
apiserver_client_certificate_expiration_seconds_bucket{le="21600"} 0
...
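The same query can be issued from Python with the standard library, which also shows what curl does with user:password@ in the URL: it sends an "Authorization: Basic" header with the base64-encoded credentials. The helper metrics_request is hypothetical; the host, port, user and password are the example values from above and must be replaced with your own.

```python
import base64
import urllib.request


def metrics_request(host: str, port: int, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the router's /metrics endpoint with basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"http://{host}:{port}/metrics",
        headers={"Authorization": f"Basic {token}"},
    )


request = metrics_request("192.168.42.64", 1936, "admin", "4v9a7ucfMi")
# with urllib.request.urlopen(request, timeout=5) as response:
#     print(response.read().decode("utf-8")[:500])
```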

ServiceAccount based query

It is possible to query the HAproxy metrics not only with basic authentication, but also with RBAC rules.

You need to create a 'ClusterRole' that allows querying the 'routers/metrics' endpoint. Later, this role will be bound to the serviceAccount that runs Prometheus.

cr-prometheus-server-route.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: prometheus
    component: server
    release: prometheus
  name: prometheus-server-route
rules:
- apiGroups:
  - route.openshift.io
  resources:
  - routers/metrics
  verbs:
  - get

The second step is to create a 'ClusterRoleBinding' that binds the serviceAccount of Prometheus to the role above.

crb-prometheus-server-route.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: prometheus
    chart: prometheus-8.14.0
    component: server
    release: prometheus
  name: prometheus-server-route
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus-server-route
subjects:
- kind: ServiceAccount
  name: prometheus-server
  namespace: mynamespace

Let's create the two objects above:

# kubectl apply -f cr-prometheus-server-route.yaml
clusterrole.rbac.authorization.k8s.io/prometheus-server-route created
# kubectl apply -f crb-prometheus-server-route.yaml
clusterrolebinding.rbac.authorization.k8s.io/prometheus-server-route created

Prometheus integration

Look up the 'Endpoints' definition of the routers. It will be referenced in the Prometheus configuration so that all router pods are discovered. We look for the Endpoints object called 'router', which has a port named '1936-tcp'; through this port we query the HAproxy metrics on the default metrics endpoint (/metrics).

# kubectl get Endpoints router -n default -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  creationTimestamp: "2019-07-09T20:26:25Z"
  labels:
    router: router
  name: router
subsets:
- ...
  ports:
  - name: 1936-tcp

In the Prometheus configuration, add a new target (scrape job) that uses 'kubernetes_sd_configs' to find the 'Endpoints' named 'router' and keeps only its '1936-tcp' port:

- job_name: 'openshift-router'
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/service-ca.crt
    server_name: router.default.svc
  bearer_token_file: /var/run/secrets/kubernetes.io/scraper/token
  kubernetes_sd_configs:
  - role: endpoints
    namespaces:
      names:
      - default
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: router;1936-tcp
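The keep rule above works as follows: Prometheus joins the values of the source_labels with the default ";" separator and keeps the discovered target only if the fully anchored regex matches the joined string. A minimal Python model of that decision (the function name and the label dictionaries are illustrative; "80-tcp" is a made-up second port name, and a missing label contributes an empty string). Prometheus uses RE2 syntax, which makes no difference for this literal regex.

```python
import re


def keep_target(labels: dict, source_labels: list, regex: str, separator: str = ";") -> bool:
    """Model of relabel action 'keep': the regex must match the whole joined value."""
    joined = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, joined) is not None


SOURCE = ["__meta_kubernetes_service_name", "__meta_kubernetes_endpoint_port_name"]
router_metrics = {
    "__meta_kubernetes_service_name": "router",
    "__meta_kubernetes_endpoint_port_name": "1936-tcp",
}
router_http = dict(router_metrics, __meta_kubernetes_endpoint_port_name="80-tcp")

print(keep_target(router_metrics, SOURCE, "router;1936-tcp"))  # True
print(keep_target(router_http, SOURCE, "router;1936-tcp"))     # False
```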

Update the 'ConfigMap' of your Prometheus configuration.

# kubectl apply -f cm-prometheus-server-haproxy.yaml
configmap/prometheus-server created

Let's check in the Prometheus pod that the configuration has been reloaded:

# kubectl describe pod prometheus-server-75c9d576c9-gjlcr -n mynamespace
Containers:
  prometheus-server-configmap-reload:
  ...
  prometheus-server:

Let's look at the logs of the sidecar container running in the Prometheus pod (it is responsible for reloading the configuration). The log should show that the configuration has been reloaded.

# kubectl logs -c prometheus-server-configmap-reload prometheus-server-75c9d576c9-gjlcr -n mynamespace
2019/07/22 19:49:40 Watching directory: "/etc/config"
2019/07/22 20:25:36 config map updated
2019/07/22 20:25:36 successfully triggered reload

The same can be seen in the log of the prometheus-server container:

# kubectl logs -c prometheus-server prometheus-server-75c9d576c9-gjlcr -n mynamespace
...
level=info ts=2019-07-22T20:25:36.016Z caller=main.go:730 msg="Loading configuration file" filename=/etc/config/prometheus.yml

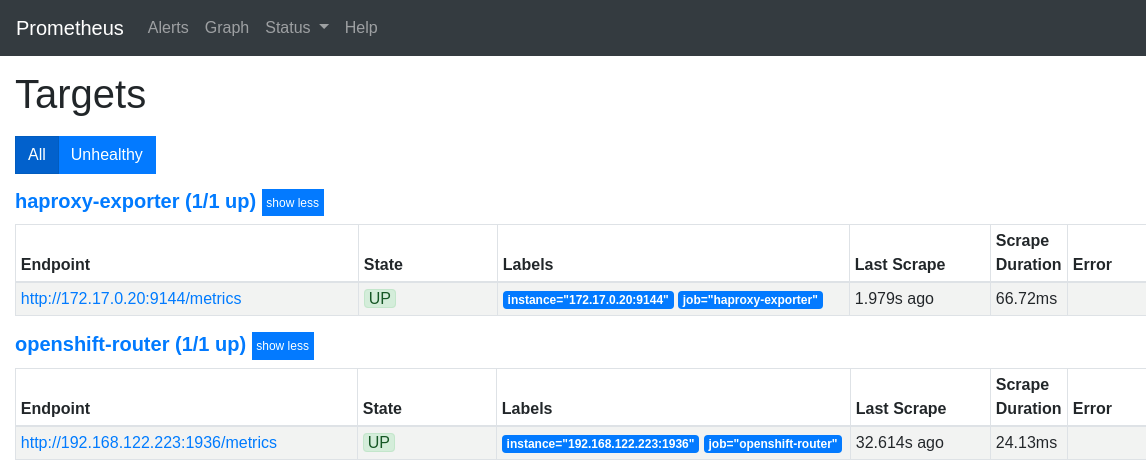

Next, open the Prometheus targets page on the console: http://mon.192.168.42.185.nip.io/targets

If there were more routers in the cluster, they would all appear here as separate endpoints.

Metric varieties

At first glance, there are two meaningful metrics in HAproxy's repertoire. These are:

haproxy_server_http_responses_total

Shows, per backend, how many responses with HTTP status 2xx and 5xx were returned for a given service. There is no per-pod breakdown here. Unfortunately, we get no information on HTTP 3xx and 4xx responses; we will get these from the access log as well.

Let's generate a 200 response with the test application. The counter should increase by one: http://test-app-service-mynamespace.192.168.42.185.nip.io/test/slowresponse/1/200

haproxy_server_http_responses_total{code="2xx",job="openshift-router",namespace="mynamespace",pod="test-app",route="test-app-service",service="test-app-service"} 1

Let's generate a 500 response with the test application. The counter should increase by one: http://test-app-service-mynamespace.192.168.42.185.nip.io/test/slowresponse/1/500

haproxy_server_http_responses_total{code="5xx",job="openshift-router",namespace="mynamespace",pod="test-app",route="test-app-service",service="test-app-service"} 1

haproxy_server_response_errors_total

haproxy_server_response_errors_total{instance="192.168.122.223:1936",job="openshift-router",namespace="mynamespace",pod="test-app-57574c8466-pvcsg",route="test-app-service",server="172.17.0.17:8080",service="test-app-service"}

Collecting metrics from logs

Overview

The task is to process the HAproxy access log with a log parser and generate Prometheus metrics from it, which are made available to Prometheus on an endpoint. We will use the grok-exporter tool, which can do both on its own: it reads logs from a file or stdin and generates metrics from them. The grok-exporter receives the logs from HAproxy via an rsyslog server packaged into the same image. Rsyslog writes the logs into a file, from which grok-exporter reads them and converts them into Prometheus metrics.

- You need to create a docker image from grok-exporter that also contains rsyslog. The container must be able to run rsyslog as root, which requires extra OpenShift configuration.

- The grok-exporter image must be run on OpenShift with the grok-exporter configuration provided in a ConfigMap and the rsyslog working directory mounted as an OpenShift volume.

- For the grok-exporter deployment, you need to create a ClusterIP-type service that can load-balance between the grok-exporter pods.

- The routers (HAproxy) must be configured to log in debug mode and send the resulting access log to port 514 of the grok-exporter service.

- The rsyslog server running in the grok-exporter pod writes the received HAproxy access logs to the file '/var/log/messages' (emptyDir type volume) and also sends them to 'stdout'.

- Logs written to stdout are collected by the docker log driver and forwarded to the centralized logging architecture.

- The grok-exporter program reads '/var/log/messages' and generates Prometheus metrics from the HAproxy access logs in it.

- Prometheus must be configured to use 'kubernetes_sd_configs' to scrape the grok-exporter pods directly instead of going through the service, thereby bypassing load-balancing, since every pod needs to be queried.

HAproxy log structure

https://www.haproxy.com/blog/introduction-to-haproxy-logging/

HAproxy provides the following log structure for each request-response pair:

Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"

Field Format Extract from the example above

1 Log writing date: Aug 6 20:53:30

2 HAproxy instance name: 192.168.122.223

3 process_name '[' pid ']:' haproxy[39]:

4 client_ip ':' client_port 192.168.42.1:50708

5 '[' request_date ']' [06/Aug/2019:20:53:30.267]

6 frontend_name public

7 backend_name '/' server_name be_edge_http:mynamespace:test-app-service....

8 TR '/' Tw '/' Tc '/' Tr '/' Ta* 1/0/0/321/321

9 status_code 200

10 bytes_read* 135

11 captured_request_cookie -

12 captured_response_cookie -

13 termination_state --NI

14 actconn '/' feconn '/' beconn '/' srv_conn '/' retries* 2/2/0/1/0

15 srv_queue '/' backend_queue 0/0

16 '"' http_request '"' "GET /test/slowresponse/1 HTTP/1.1"

- Tq: total time in milliseconds spent waiting for the client to send a full HTTP request, not counting data

- Tw: total time in milliseconds spent waiting in the various queues

- Tc: total time in milliseconds spent waiting for the connection to establish to the final server, including retries

- Tr: total time in milliseconds spent waiting for the server to send a full HTTP response, not counting data

- Tt: total time in milliseconds elapsed between the accept and the last close. It covers all possible processing

- actconn: total number of concurrent connections on the process when the session was logged

- feconn: total number of concurrent connections on the frontend when the session was logged

- beconn: total number of concurrent connections handled by the backend when the session was logged

- srv_conn: total number of concurrent connections still active on the server when the session was logged

- retries: number of connection retries experienced by this session when trying to connect to the server

Full specification: https://github.com/berkiadam/haproxy-metrics/blob/master/ha-proxy-log-structure.pdf
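The field layout above can be checked with a short Python sketch. It is only an illustration, not part of the grok-exporter setup: the function name split_fields is hypothetical, and it assumes exactly the layout of the example line (a three-token syslog date, no spaces inside the frontend and backend names, and the quoted request at the end). The timer names follow the Format column of the table.

```python
# Field order of the HAproxy HTTP log line shown in the table above (fields 8 and 14).
TIMER_NAMES = ("TR", "Tw", "Tc", "Tr", "Ta")
CONN_NAMES = ("actconn", "feconn", "beconn", "srv_conn", "retries")


def split_fields(line):
    """Split one syslog-wrapped HAproxy HTTP access-log line into named fields."""
    # 17 splits -> 18 parts; the quoted HTTP request (last part) keeps its spaces.
    parts = line.split(maxsplit=17)
    if len(parts) != 18:
        raise ValueError(f"expected 18 fields, got {len(parts)}")
    backend, _, server = parts[8].partition("/")
    return {
        "log_date": " ".join(parts[0:3]),
        "haproxy_instance": parts[3],
        "process": parts[4].rstrip(":"),
        "client": parts[5],
        "request_date": parts[6].strip("[]"),
        "frontend": parts[7],
        "backend": backend,
        "server": server,
        # A timer of -1 means the corresponding phase did not complete.
        "timers_ms": dict(zip(TIMER_NAMES, map(int, parts[9].split("/")))),
        "status_code": int(parts[10]),
        "bytes_read": parts[11],  # kept as text: HAproxy may prefix it with '+'
        "termination_state": parts[14],
        "connections": dict(zip(CONN_NAMES, parts[15].split("/"))),
        "queues": parts[16],
        "http_request": parts[17].strip('"'),
    }


example = ('Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 '
           '[06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/'
           'pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 '
           '200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"')
print(split_fields(example)["timers_ms"])
# {'TR': 1, 'Tw': 0, 'Tc': 0, 'Tr': 321, 'Ta': 321}
```

Splitting on whitespace is enough for this fixed layout; grok-exporter itself uses the regular-expression approach described below.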

grok-exporter introduction

Grok-exporter is a tool that processes logs based on regular expressions and produces the 4 basic types of Prometheus metrics:

- gauge

- counter

- histogram

- summary (quantile)

You can set any number of labels on the metric using elements of the parsed log line. Grok-exporter is based on the 'logstash-grok' implementation and uses the patterns and functions defined in Logstash.

Detailed documentation at:

https://github.com/fstab/grok_exporter/blob/master/CONFIG.md

The grok-exporter can handle three types of inputs:

- file: this is the one we will use; it processes the log written by rsyslog.

- webhook: this could be used if we ran Logstash instead of the rsyslog server and forwarded the logs to the grok-exporter webhook with the Logstash "http-output" plugin.

- stdin: stdin could also be used with rsyslog. This requires the 'omprog' module, which passes the messages it reads from the rsyslog socket to a program on its stdin. omprog restarts the program if it is no longer running. https://www.rsyslog.com/doc/v8-stable/configuration/modules/omprog.html

Alternative Solutions

Fluentd

Fluentd also solves the problem. To do this, you need to use three fluentd plugins (I haven't tried this):

- fluent-plugin-rewrite-tag-filter

- fluent-plugin-prometheus

- fluent-plugin-record-modifier.

mtail:

The other alternative would be Google's mtail project, which is said to be more resource-efficient at processing logs than the grok engine.

https://github.com/google/mtail

Configuration file

The configuration of grok-exporter can be found in /etc/grok_exporter/config.yml. There are 5 sections.

- global: general settings, such as the version of the configuration format (config_version).

- input: specifies where and how to read the logs. It can be stdin, file or webhook. We will use the file input.

- grok: the location of the grok patterns. In the Docker image this is the /grok/patterns folder.

- metrics: this is the most important part. Here you define the metrics and the associated regular expressions (in the form of grok patterns).

- server: the port the server should listen on.

Metrics

Metrics must be defined per metric type. The four basic Prometheus metric types are supported: 'Gauge, Counter, Histogram, Summary' (quantile). Under the type you must specify:

- name: the name of the metric.

- help: the help text of the metric.

- match: describes the structure of the log line, like a regular expression, that a line must fit to be counted in the metric. Here you can use predefined grok patterns:

- 'BASIC grok patterns' : https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/grok-patterns

- 'HAPROXY patterns' : https://github.com/logstash-plugins/logstash-patterns-core/blob/master/patterns/haproxy

- labels: you can name the result groups of the match. These names can be referenced in the labels section, which creates a label whose value is the parsed data.

match

In match, you write a regular expression built from grok building blocks. Here we assume that the elements of the log line are separated by spaces. Each building block has the form '%{PATTERN_NAME}', where PATTERN_NAME must exist in a pattern collection. The most common one is '%{DATA}', which matches arbitrary text (it is defined as the non-greedy regular expression .*?). Several patterns are themselves combined from multiple elementary patterns. If you want the regular expression described by a pattern to become a result group, you must name it, for example:

%{DATA:this_is_the_name}

The value of the field matched by the pattern will then be stored in the variable 'this_is_the_name', which can be referenced when defining the value of the metric or when producing a label.
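To see what grok does with such an expression, the following Python sketch expands %{NAME} and %{NAME:field} references into a plain regular expression with named groups. It is a simplified model of grok, not grok-exporter's own implementation: the PATTERNS dictionary holds only a handful of definitions taken from the basic grok-patterns file, and grok_to_regex is a hypothetical helper name.

```python
import re

# A few definitions from the basic grok-patterns file; note that USER refers to USERNAME.
PATTERNS = {
    "DATA": r".*?",
    "NOTSPACE": r"\S+",
    "INT": r"(?:[+-]?(?:[0-9]+))",
    "WORD": r"\b\w+\b",
    "USERNAME": r"[a-zA-Z0-9._-]+",
    "USER": r"%{USERNAME}",
}

# %{NAME} or %{NAME:field}
GROK_REF = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def grok_to_regex(expr):
    """Expand grok references into a Python regular expression (hypothetical helper)."""
    def expand(m):
        name, field = m.group(1), m.group(2)
        body = grok_to_regex(PATTERNS[name])  # patterns may reference other patterns
        # A named reference becomes a named capture group, an unnamed one does not capture.
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return GROK_REF.sub(expand, expr)


regex = re.compile(grok_to_regex('"%{WORD:method} %{DATA:path} HTTP/%{NOTSPACE:version}"'))
m = regex.match('"GET /test/slowresponse/1 HTTP/1.1"')
print(m.groupdict())
# {'method': 'GET', 'path': '/test/slowresponse/1', 'version': '1.1'}
```

The non-greedy %{DATA} stops as soon as the rest of the expression (" HTTP/...") can match, which is why it works well between fixed separators.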

labels

In the labels section you can refer to the named patterns. The label then gets the value of the field parsed from the given log line. For example, with the '%{DATA:this_is_the_name}' pattern you could define the following label:

mylabel: '{{.this_is_the_name}}'

Then, if the field matched by the %{DATA} pattern was 'myvalue', the metric would be labeled as follows: '{mylabel="myvalue"}'

Let's look at an example:

The following log line is given:

7/30/2016 14:37:03 adam 1.5

And the following metric rule in grok config:

metrics:
  - type: counter
    name: grok_example_lines_total
    help: Example counter metric with labels.
    match: '%{DATE} %{TIME} %{USER:user} %{NUMBER}'
    labels:
      user: '{{.user}}'

The metric will be named 'grok_example_lines_total'. The resulting output is:

# HELP grok_example_lines_total Example counter metric with labels.
# TYPE grok_example_lines_total counter
grok_example_lines_total{user="adam"} 1
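The counter example can be imitated in a few lines of Python to make the matching and labeling steps explicit. This is a sketch under simplifying assumptions: the regular expression is a hand-written stand-in for '%{DATE} %{TIME} %{USER:user} %{NUMBER}' that only accepts US-style dates and 24-hour times (a trailing "PM" after the time would make the line fail to match), and count_lines and render_counter are hypothetical helper names, not grok-exporter functions.

```python
import re
from collections import Counter

# Hand-written stand-in for '%{DATE} %{TIME} %{USER:user} %{NUMBER}'
# (US-style date, 24-hour time); the real grok patterns accept more formats.
LINE = re.compile(
    r"\d{1,2}/\d{1,2}/\d{4} \d{1,2}:\d{2}:\d{2} (?P<user>[a-zA-Z0-9._-]+) [+-]?\d+(?:\.\d+)?"
)


def count_lines(lines):
    """Count matching lines per 'user' label value, like a counter metric."""
    counts = Counter()
    for line in lines:
        m = LINE.search(line)
        if m:  # lines that do not match leave the metric untouched
            counts[m.group("user")] += 1
    return counts


def render_counter(counts, name="grok_example_lines_total",
                   help_text="Example counter metric with labels."):
    """Format the counts in the Prometheus text exposition format."""
    out = [f"# HELP {name} {help_text}", f"# TYPE {name} counter"]
    out += [f'{name}{{user="{user}"}} {n}' for user, n in sorted(counts.items())]
    return "\n".join(out)


print(render_counter(count_lines(["7/30/2016 14:37:03 adam 1.5"])))
```

Each distinct label value becomes its own time series, so a label built from a high-cardinality field (for example a request path) can create a very large number of series.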

Determine the value of a metric

For a counter-type metric you do not need to determine the value, because it simply counts the number of matching log lines. For all other types, however, you have to specify what is considered the value. This goes in the 'value' section, where a named grok pattern from the match section is referenced with the same Go template syntax as in the labels. For example, given the following two log lines:

7/30/2016 14:37:03 adam 1
7/30/2016 14:37:03 adam 5

we define the following histogram with two buckets, 1 and 2:

metrics:
  - type: histogram
    name: grok_example_lines
    help: Example counter metric with labels.
    match: '%{DATE} %{TIME} %{USER:user} %{NUMBER:val}'
    buckets: [1,2]
    value: '{{.val}}'
    labels:
      user: '{{.user}}'

This will result in the following metrics:

# HELP grok_example_lines Example counter metric with labels.
# TYPE grok_example_lines histogram
grok_example_lines_bucket{user="adam", le="1"} 1
grok_example_lines_bucket{user="adam", le="2"} 1
grok_example_lines_bucket{user="adam", le="+Inf"} 2
grok_example_lines_count{user="adam"} 2
grok_example_lines_sum{user="adam"} 6
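The bucket arithmetic behind this output can be reproduced with a small Python sketch. Every bucket counts the observations that are less than or equal to its upper bound (le), so the counts are cumulative and the +Inf bucket always equals the total count. The helper names observe_histogram and render_histogram are hypothetical; this is a model of the output, not grok-exporter code.

```python
def observe_histogram(values, buckets):
    """Cumulative bucket counts plus _count and _sum, as a Prometheus histogram exposes them."""
    bounds = sorted(buckets) + [float("inf")]
    # Each bucket counts every observation <= its upper bound, so counts are cumulative.
    cumulative = [sum(1 for v in values if v <= le) for le in bounds]
    return {"buckets": dict(zip(bounds, cumulative)), "count": len(values), "sum": sum(values)}


def render_histogram(name, labels, result):
    """Yield exposition-format lines; 'labels' is a pre-formatted string such as user="adam"."""
    for le, n in result["buckets"].items():
        le_text = "+Inf" if le == float("inf") else f"{le:g}"
        yield f'{name}_bucket{{{labels},le="{le_text}"}} {n}'
    yield f"{name}_count{{{labels}}} {result['count']}"
    yield f"{name}_sum{{{labels}}} {result['sum']:g}"


# The values 1 and 5 parsed from the two log lines, with buckets [1, 2]
for line in render_histogram("grok_example_lines", 'user="adam"', observe_histogram([1, 5], [1, 2])):
    print(line)
```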

Functions

You can apply functions to the values of the metric (value) and to the labels. Functions require grok-exporter version '0.2.7' or later. Both string manipulation functions and arithmetic functions are available. The following two-argument arithmetic functions are supported:

- add

- subtract

- multiply

- divide

The syntax is {{FUNCTION_NAME ATTR1 ATTR2}}, where ATTR1 and ATTR2 can be either a value derived from a pattern or a natural number. Values obtained from a pattern are referenced the same way as elsewhere, with a leading dot. For example, if we use the multiply function in the example above:

value: "{{multiply .val 1000}}"

Then the metric changes to:

# HELP grok_example_lines Example counter metric with labels.
# TYPE grok_example_lines histogram
grok_example_lines_bucket{user="adam", le="1"} 0
grok_example_lines_bucket{user="adam", le="2"} 0
grok_example_lines_bucket{user="adam", le="+Inf"} 2
...

Since the two values change to 1000 and 5000 respectively, both fall only into the +Inf bucket.
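Below is a rough Python model of how such a function call is evaluated against the named fields of a match. grok-exporter actually evaluates these expressions as Go templates; this sketch only supports a single two-argument call of the form {{FUNC ARG1 ARG2}}, and evaluate is a hypothetical name. It shows why the multiplied values 1000 and 5000 end up only in the +Inf bucket.

```python
import operator
import re

# The four two-argument arithmetic functions listed above.
FUNCS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}

# One call such as {{multiply .val 1000}}: function name followed by two arguments.
CALL = re.compile(r"\{\{\s*(\w+)\s+(\S+)\s+(\S+)\s*\}\}")


def evaluate(template, fields):
    """Evaluate a single '{{FUNC ARG1 ARG2}}' expression (hypothetical helper)."""
    m = CALL.fullmatch(template.strip())
    if m is None or m.group(1) not in FUNCS:
        raise ValueError(f"unsupported template: {template!r}")
    # '.name' refers to a named grok field (captured as text), anything else is a number literal.
    args = [float(fields[a[1:]]) if a.startswith(".") else float(a) for a in m.group(2, 3)]
    return FUNCS[m.group(1)](*args)


buckets = [1, 2]
for raw in ("1", "5"):  # the two values captured as 'val' from the log lines
    value = evaluate("{{multiply .val 1000}}", {"val": raw})
    print(value, value > max(buckets))  # True: only the +Inf bucket will count it
```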

Creating a grok config file

We need to put together a grok pattern that matches the HAproxy access-log lines and extracts all the attributes that are important to us:

- total time to respond

- haproxy instance id

- OpenShift service namespace

- pod name

Example haproxy access-log:

Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"

In the config.yml file, we will define a histogram that contains the response time for full requests. This is a classic histogram, usually containing the following buckets (in seconds):

[0.1, 0.2, 0.4, 1, 3, 8, 20, 60, 120]

By convention, response-time metrics are named '<prefix>_http_request_duration_seconds'.

config.yml

global:
  config_version: 2
input:
  type: file
  path: /var/log/messages
  readall: true
grok:
  patterns_dir: ./patterns
metrics:
  - type: histogram
    name: haproxy_http_request_duration_seconds
    help: The request durations of the applications running in openshift that have route defined.
    match: '%{SYSLOGTIMESTAMP:timestamp} %{DATA:Aloha_name} %{DATA:haproxy_process}: %{DATA:client_ip}:%{INT:client_port} \[%{HAPROXYDATE:accept_date}\] %{DATA:frontend_name} %{DATA}:%{DATA:namespace}:%{DATA:service}/pod:%{DATA:pod_name}:%{DATA} %{INT:Tq}/%{INT:Tw}/%{INT:Tc}/%{INT:Tr}/%{INT:Tt} %{INT:http_status_code} %{NOTSPACE:bytes_read} %{DATA} %{DATA} %{DATA} %{INT:actconn}/%{INT:feconn}/%{INT:beconn}/%{INT:srvconn}/%{NOTSPACE:retries} %{INT:srv_queue}/%{INT:backend_queue} "%{WORD:Method} %{URIPATHPARAM:request} HTTP/%{NUMBER:http_version}"'
    value: "{{divide .Tt 1000}}"
    buckets: [0.1, 0.2, 0.4, 1, 3, 8, 20, 60, 120]
    labels:
      haproxy: '{{.haproxy_process}}'
      namespace: '{{.namespace}}'
      service: '{{.service}}'
      pod_name: '{{.pod_name}}'
server:
  port: 9144

- type: file -> read the logs from a file

- path: /var/log/messages -> the rsyslog server writes the logs to /var/log/messages by default

- readall: true -> reads the entire log file from the beginning. Use this only for testing; in a live environment it should always be set to false.

- patterns_dir: ./patterns -> the pattern definitions are included in the docker image

- value: "{{divide .Tt 1000}}" -> the serving time in the HAproxy log is in milliseconds and must be converted to seconds.

- port: 9144 -> this port provides the /metrics endpoint.

Warning

Do not forget to set 'readall' to false in a live environment; leaving it set to true greatly reduces efficiency.
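Before deploying, it can help to check which attributes the match expression extracts from the example line. The following Python sketch uses a hand-translated, simplified regular expression instead of the real grok patterns (SYSLOGTIMESTAMP, HAPROXYDATE, and so on), so treat it as an approximation; to_observation is a hypothetical name. It produces the same four labels as the config and converts Tt from milliseconds to seconds, as described for the value line above. Lines without a /pod: part, such as requests answered with <NOSRV>, do not match and are skipped.

```python
import re

# Simplified, hand-translated equivalent of the grok 'match' expression in config.yml.
# Only an approximation: the real grok patterns (SYSLOGTIMESTAMP, HAPROXYDATE, ...) are stricter.
ACCESS_LOG = re.compile(
    r"(?P<timestamp>\w{3} +\d+ \d\d:\d\d:\d\d) (?P<host>\S+) (?P<haproxy_process>\S+): "
    r"(?P<client_ip>[^:]+):(?P<client_port>\d+) \[(?P<accept_date>[^\]]+)\] "
    r"(?P<frontend_name>\S+) [^:\s]+:(?P<namespace>[^:]+):(?P<service>[^/]+)/pod:(?P<pod_name>[^:]+):\S* "
    r"(?P<Tq>-?\d+)/(?P<Tw>-?\d+)/(?P<Tc>-?\d+)/(?P<Tr>-?\d+)/(?P<Tt>\d+) "
    r"(?P<http_status_code>\d{3}) "
)


def to_observation(line):
    """Return (labels, seconds) for one routed request, or None if the line does not match."""
    m = ACCESS_LOG.match(line)
    if m is None:
        return None  # e.g. '<NOSRV>' lines: no '/pod:' part, so nothing is recorded
    labels = {
        "haproxy": m.group("haproxy_process"),
        "namespace": m.group("namespace"),
        "service": m.group("service"),
        "pod_name": m.group("pod_name"),
    }
    return labels, int(m.group("Tt")) / 1000  # milliseconds -> seconds, like divide .Tt 1000


log = [
    'Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public '
    'be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 '
    '1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"',
    'Aug 9 12:57:35 192.168.122.223 haproxy[32]: 192.168.42.1:48268 [09/Aug/2019:12:57:20.648] public '
    'public/<NOSRV> -1/-1/-1/-1/15002 408 212 - - cR-- 2/2/0/0/0 0/0 "<BADREQ>"',
]
for line in log:
    print(to_observation(line))
```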

Online grok tester

There are several online grok testing tools. They are very useful for putting together the required grok pattern: https://grokdebug.herokuapp.com/

Building the docker image

The grok-exporter docker image is available on Docker Hub in several versions. The only problem with them is that they do not include an rsyslog server, which we need so that HAproxy can send its logs directly to the grok-exporter pods.

docker-hub link: https://hub.docker.com/r/palobo/grok_exporter

The second problem is that they are based on an Ubuntu base image, where it is very difficult to make rsyslog log to stdout. Logging to stdout is needed so that the Kubernetes centralized log collector also receives the HAproxy logs, and both monitoring and centralized logging can be served. Therefore the original Dockerfile will be ported to 'centos 7' and supplemented with the installation of the rsyslog server.

All necessary files are available on git-hub: https://github.com/berkiadam/haproxy-metrics/tree/master/grok-exporter-centos

I also created an Ubuntu-based solution, an extension of the original Docker Hub solution, which can also be found on GitHub in the 'grok-exporter-ubuntu' folder. For the rest of this howto, we will always use the CentOS version.

Dockerfile

We will start with the 'palobo/grok_exporter' Dockerfile, but will complement it with the rsyslog installation and port it to CentOS: https://github.com/berkiadam/haproxy-metrics/tree/master/grok-exporter-centos

File:Grok-exporter-docker-build.zip

Dockerfile

FROM centos:7
LABEL Maintainer="Adam Berki <https://github.com/berkiadam/>"
LABEL Name="grok_exporter"
LABEL Version="0.2.8"

ENV PID_DIR /tmp/pidDir
ENV GROK_ARCH="grok_exporter-0.2.8.linux-amd64"
ENV GROK_VERSION="v0.2.8"

USER root

RUN yum -y install rsyslog wget unzip && \
    yum clean all && \
    echo "" > /etc/rsyslog.d/listen.conf && \
    mkdir -p ${PID_DIR} && \
    chmod 777 ${PID_DIR} \
    && wget https://github.com/fstab/grok_exporter/releases/download/$GROK_VERSION/$GROK_ARCH.zip \
    && unzip $GROK_ARCH.zip \
    && mv $GROK_ARCH /grok \
    && rm $GROK_ARCH.zip \
    && yum -y remove wget unzip \
    && rm -fr /var/lib/apt/lists/*

RUN mkdir -p /etc/grok_exporter && ln -sf /etc/grok_exporter/config.yml /grok/

COPY rsyslog.conf /etc/rsyslog.conf

EXPOSE 514/tcp 514/udp 9144/tcp
WORKDIR /grok

CMD sh -c "nohup /usr/sbin/rsyslogd -i ${PID_DIR}/pid -n &" && ./grok_exporter -config /grok/config.yml

Note

It is important to use at least version 0.2.7 of grok-exporter, because function support first appeared in that version.

The rsyslog.conf file must contain at least the following, which enables receiving logs on port 514 over both UDP and TCP (see the zip above for details) and writes all logs to both stdout and /var/log/messages.

$ModLoad omstdout.so

# provides UDP syslog reception
module(load="imudp")
input(type="imudp" port="514")

# provides TCP syslog reception
module(load="imtcp")
input(type="imtcp" port="514")

...

# send everything to stdout
*.* :omstdout:
*.*;mail.none;authpriv.none;cron.none /var/log/messages

Local build and local test

First, we will build the docker image with the local docker daemon so that we can run it locally for testing. Later we will build it on the minishift VM as well, since only from there can we upload it to the minishift docker registry. Since we will be uploading the image to a remote (not local) docker registry, it is important to follow the naming convention:

<repo URL>:<repo port>/<namespace>/<image-name>:<tag>

We will upload the image to the docker registry running on minishift, so it is important to specify the address and port of the minishift docker registry and the OpenShift namespace where the image will be placed.

# docker build -t 172.30.1.1:5000/default/grok_exporter:1.1.0 .

The resulting image can be tested by running it with the local docker daemon. Create a haproxy test log file ('haproxy.log') with the following content. grok-exporter will process it as if it had been sent by HAproxy.

Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.267] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 1/0/0/321/321 200 135 - - --NI 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"
Aug 6 20:53:30 192.168.122.223 haproxy[39]: 192.168.42.1:50708 [06/Aug/2019:20:53:30.588] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.12:8080 53/0/0/11/63 404 539 - - --VN 2/2/0/1/0 0/0 "GET /favicon.ico HTTP/1.1"

Put the grok config file 'config.yml' created above in the same folder. In config.yml, change input.path to '/grok/haproxy.log' so that grok-exporter processes our test log file. Then start the container with the 'docker run' command:

# docker run -d -p 9144:9144 -p 514:514 -v $(pwd)/config.yml:/etc/grok_exporter/config.yml -v $(pwd)/haproxy.log:/grok/haproxy.log --name grok 172.30.1.1:5000/default/grok_exporter:1.1.0

After starting, check in the log that both grok-exporter and rsyslog have actually started:

# docker logs grok
 * Starting enhanced syslogd rsyslogd ...done.
Starting server on http://7854f3a9fe76:9144/metrics

The metrics are then available in the browser at http://localhost:9144/metrics:

...

# HELP haproxy_http_request_duration_seconds The request durations of the applications running in openshift that have route defined.
# TYPE haproxy_http_request_duration_seconds histogram
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.1"} 1
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.2"} 1
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.4"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="1"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="3"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="8"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="20"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="60"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="120"} 2
haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="+Inf"} 2
haproxy_http_request_duration_seconds_sum{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service"} 0.384
haproxy_http_request_duration_seconds_count{haproxy="haproxy[39]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service"} 2
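The cumulative bucket counts can be turned into an approximate latency quantile. The Python sketch below follows the linear interpolation that Prometheus' histogram_quantile() function applies within the bucket that contains the requested rank. It is a simplified model for a single series (no label grouping, no repair of non-monotonic buckets), it assumes le is the last label on each line as in the output above, and parse_buckets and bucket_quantile are hypothetical names. With the bucket values above, the 90th percentile comes out at about 0.36 seconds.

```python
import math
import re

# Captures the le="..." bound and the value at the end of a *_bucket sample line.
BUCKET_LINE = re.compile(r'le="([^"]+)"\}\s+(\S+)\s*$')


def parse_buckets(text):
    """Collect sorted (upper_bound, cumulative_count) pairs from the _bucket lines of one series."""
    pairs = []
    for line in text.splitlines():
        m = BUCKET_LINE.search(line) if "_bucket{" in line else None
        if m:
            pairs.append((float(m.group(1)), float(m.group(2))))  # float() accepts "+Inf"
    return sorted(pairs)


def bucket_quantile(q, buckets):
    """Estimate the q-quantile (0 < q <= 1) by linear interpolation, modelled on histogram_quantile()."""
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    if len(buckets) < 2 or not math.isinf(buckets[-1][0]):
        raise ValueError("need at least one finite bucket plus the +Inf bucket")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    # Index of the first bucket whose cumulative count reaches the rank.
    b = next(i for i, (_, count) in enumerate(buckets) if count >= rank)
    if b == len(buckets) - 1:
        return buckets[-2][0]  # rank falls into +Inf: report the highest finite bound
    if b == 0 and buckets[0][0] <= 0:
        return buckets[0][0]
    start, below = (0.0, 0.0) if b == 0 else buckets[b - 1]
    end, count = buckets[b]
    return start + (end - start) * (rank - below) / (count - below)


sample = "\n".join(
    f'haproxy_http_request_duration_seconds_bucket{{le="{le}"}} {n}'
    for le, n in [("0.1", 1), ("0.2", 1), ("0.4", 2), ("1", 2), ("3", 2), ("8", 2),
                  ("20", 2), ("60", 2), ("120", 2), ("+Inf", 2)]
)
print(round(bucket_quantile(0.9, parse_buckets(sample)), 3))  # 0.36
```

The estimate assumes observations are spread evenly inside a bucket, so its precision depends on how closely the bucket bounds surround the real latencies.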

As a second step, verify that the 'rsyslog' server running in the docker container can receive remote log messages. To do this, first enter the container and watch the /var/log/messages file:

# docker exec -it grok /bin/bash
root@a27e5b5f2de7:/grok# tail -f /var/log/messages
Aug 8 14:44:37 a27e5b5f2de7 rsyslogd: [origin software="rsyslogd" swVersion="8.16.0" x-pid="21" x-info="http://www.rsyslog.com"] start
Aug 8 14:44:37 a27e5b5f2de7 rsyslogd-2039: Could not open output pipe '/dev/xconsole':: Permission denied [v8.16.0 try http://www.rsyslog.com/e/2039 ]
Aug 8 14:44:37 a27e5b5f2de7 rsyslogd: rsyslogd's groupid changed to 107
Aug 8 14:44:37 a27e5b5f2de7 rsyslogd: rsyslogd's userid changed to 105
Aug 8 14:44:38 a27e5b5f2de7 rsyslogd-2007: action 'action 9' suspended, next retry is Thu Aug 8 14:45:08 2019 [v8.16.0 try http://www.rsyslog.com/e/2007 ]

Now, from the host machine, use the logger command to send a log message to the rsyslog server running in the container on port 514:

# logger -n localhost -P 514 -T "this is the message"

(-T means TCP)

The message should then appear in the syslog file:

Aug 8 16:54:25 dell adam this is the message
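If the logger utility is not available (for example in a minimal image), a similar test message can be sent from Python. This is a sketch that assumes rsyslog's imtcp input accepts newline-terminated messages in the traditional BSD syslog format (<PRI>TIMESTAMP HOSTNAME TAG: MESSAGE). send_syslog_tcp is a hypothetical helper, and the priority 14 (facility user, severity info) and the tag "test" are arbitrary choices.

```python
import socket
from datetime import datetime


def send_syslog_tcp(message, host="localhost", port=514, tag="test"):
    """Send one BSD-syslog style line over TCP and return the exact text sent.

    Hypothetical helper. PRI 14 = facility user (1) * 8 + severity info (6).
    The trailing newline acts as the message delimiter on the TCP stream.
    """
    now = datetime.now()
    # Traditional syslog timestamp, day of month padded with a space: "Aug  8 16:54:25"
    timestamp = f"{now:%b} {now.day:2d} {now:%H:%M:%S}"
    line = f"<14>{timestamp} {socket.gethostname()} {tag}: {message}\n"
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(line.encode("utf-8"))
    return line
```

For example, calling send_syslog_tcp("this is the message") on the host targets the container's published port 514, just like the logger command above.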

The local docker container can now be deleted.

Remote build

We want to upload the finished docker image to minishift's own registry. To do this, the image must be built with the local docker daemon of the minishift VM, because the minishift registry can only be accessed from there.

Details here: Image push to the minishift docker registry

For the admin user to have permission to push the image to the minishift registry into the default namespace, where the router also runs, it needs the cluster-admin role. It is important to log in with -u system:admin and not just with a plain oc login, because otherwise we will not have permission to run the oc adm command. We will refer to the user the same way in the --as parameter.

# oc login -u system:admin
# oc adm policy add-cluster-role-to-user cluster-admin admin --as=system:admin
cluster role "cluster-admin" added: "admin"

Note

If we get the error "Error from server (NotFound): the server could not find the requested resource", it means that our oc client program is older than the OpenShift version.

Point the local docker client to the docker daemon running on the minishift VM, then log in to the minishift docker registry:

# minishift docker-env
# eval $(minishift docker-env)
# oc login
Username: admin
Password: <admin>
# docker login -u admin -p $(oc whoami -t) $(minishift openshift registry)
Login Succeeded

Build the image on the minishift VM as well:

# docker build -t 172.30.1.1:5000/default/grok_exporter:1.1.0 .

Log in to the minishift docker registry, then issue the push command.

# docker push 172.30.1.1:5000/default/grok_exporter:1.1.0

Kubernetes objects

For grok-exporter we will create a serviceAccount, a deployment, a service, and a configMap in which the grok-exporter configuration is stored. In addition, we will modify the SecurityContextConstraints object named anyuid, because the rsyslog server requires the grok-exporter container to run in privileged mode.

- haproxy-exporter service account

- cm-haproxy-exporter.yaml

- deployment-haproxy-exporter.yaml

- svc-haproxy-exporter-service.yaml

- scc-anyuid.yaml

The complete configuration can be downloaded here: File:Haproxy-kubernetes-objects.zip, or found in the following git repository: https://github.com/berkiadam/haproxy-metrics

Creating the ServiceAccount

The haproxy-exporter needs its own serviceAccount, for which we will allow running privileged (root) containers. This is required by the rsyslog server.

# kubectl create serviceaccount haproxy-exporter -n default
serviceaccount/haproxy-exporter created

As a result, the following serviceAccount definition was created:

apiVersion: v1
imagePullSecrets:
- name: haproxy-exporter-dockercfg-67x4j
kind: ServiceAccount
metadata:
  creationTimestamp: "2019-08-10T12:27:52Z"
  name: haproxy-exporter
  namespace: default
  resourceVersion: "837500"
  selfLink: /api/v1/namespaces/default/serviceaccounts/haproxy-exporter
  uid: 45a82935-bb6a-11e9-9175-525400efb4ec
secrets:
- name: haproxy-exporter-token-8svkx
- name: haproxy-exporter-dockercfg-67x4j

Defining the objects

cm-haproxy-exporter.yaml

apiVersion: v1
data:
  config.yml: |
    ...grok-exporter config.yml...
kind: ConfigMap
metadata:
  name: haproxy-exporter
  namespace: default

deployment-haproxy-exporter.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  ...
  name: haproxy-exporter
  namespace: default
spec:
  ...
  template:
    ...
    spec:
      containers:
      - image: '172.30.1.1:5000/default/grok_exporter:1.1.0'
        imagePullPolicy: IfNotPresent
        name: grok-exporter
        ports:
        - containerPort: 9144
          protocol: TCP
        - containerPort: 514
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/grok_exporter/
          name: config-volume
        - mountPath: /var/log
          name: log-dir
      ...
      volumes:
      - name: config-volume
        configMap:
          defaultMode: 420
          name: haproxy-exporter
      - name: log-dir
        emptyDir: {}

svc-haproxy-exporter-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    run: haproxy-exporter
  name: haproxy-exporter-service
  namespace: default
spec:
  ports:
  - name: port-1
    port: 9144
    protocol: TCP
    targetPort: 9144
  - name: port-2
    port: 514
    protocol: TCP
    targetPort: 514
  - name: port-3
    port: 514
    protocol: UDP
    targetPort: 514
  selector:
    run: haproxy-exporter
  sessionAffinity: None
  type: ClusterIP

SecurityContextConstraints

Because of the rsyslog server in grok-exporter, the container must run in privileged mode. To allow running as root, the serviceAccount belonging to haproxy-exporter must be added to the SCC named anyuid. The privileged SCC is not needed, because the container only needs to start as root. Otherwise rsyslog would not be able to create its sockets.

Warning

Managing SCCs requires more than the admin rolebinding that the developer user has on mynamespace. You have to log in as admin: oc login -u system:admin

List the SCCs:

# kubectl get SecurityContextConstraints
NAME         PRIV    CAPS   SELINUX     RUNASUSER   FSGROUP    SUPGROUP   PRIORITY   READONLYROOTFS   VOLUMES
anyuid       false   []     MustRunAs   RunAsAny    RunAsAny   RunAsAny   10         false            [configMap downwardAPI emptyDir persistentVolumeClaim ...
privileged   true    [*]    RunAsAny    RunAsAny    RunAsAny   RunAsAny   <none>     false            [*]
...

Add the serviceAccount to the users section of the anyuid SCC in the following form:

- system:serviceaccount:<namespace>:<serviceAccount>

scc-anyuid.yaml

kind: SecurityContextConstraints
metadata:
  name: anyuid
...
users:
- system:serviceaccount:default:haproxy-exporter
...

Since this is an existing SCC and we only want to make a small change to it, we can also edit it in place:

# oc edit scc anyuid
securitycontextconstraints.security.openshift.io/anyuid edited

Creating the objects

# kubectl apply -f cm-haproxy-exporter.yaml
configmap/haproxy-exporter created

# kubectl apply -f deployment-haproxy-exporter.yaml
deployment.apps/haproxy-exporter created
# kubectl rollout status deployment haproxy-exporter -n default
deployment "haproxy-exporter" successfully rolled out

# kubectl apply -f svc-haproxy-exporter-service.yaml

Testing

Find the haproxy-exporter pod, then check its log:

# kubectl logs haproxy-exporter-744d84f5df-9fj9m -n default
 * Starting enhanced syslogd rsyslogd ...done.
Starting server on http://haproxy-exporter-744d84f5df-9fj9m:9144/metrics

Then enter the container and test that rsyslog works:

# kubectl exec -it haproxy-exporter-647d7dfcdf-gbgrg /bin/bash -n default

Then send a log message to rsyslog with the logger command:

logger -n localhost -P 514 -T "this is the message"

Now list the contents of the /var/log/messages file:

# cat messages
Aug 28 19:16:09 localhost root: this is the message

Exit the container and fetch the pod logs again to check that the message was also written to stdout:

# kubectl logs haproxy-exporter-647d7dfcdf-gbgrg -n default
Starting server on http://haproxy-exporter-647d7dfcdf-gbgrg:9144/metrics
2019-08-28T19:16:09+00:00 localhost root: this is the message

HAproxy configuration

Setting the environment variables

Through an environment variable, we will tell HAproxy the address of the rsyslog server running in the haproxy-exporter pod. As a first step, list the haproxy-exporter service.

# kubectl get svc -n default
NAME                       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                    AGE
haproxy-exporter-service   ClusterIP   172.30.213.183   <none>        9144/TCP,514/TCP,514/UDP   15s
..

HAproxy takes the address of the rsyslog server from the environment variable named ROUTER_SYSLOG_ADDRESS (set in the router's DeploymentConfig). We can change it at runtime with the oc set env command. After the variable is changed, the pod restarts automatically.

# oc set env dc/myrouter ROUTER_SYSLOG_ADDRESS=172.30.213.183 -n default
deploymentconfig.apps.openshift.io/myrouter updated

Note

On minishift, resolving service names does not work inside the router container, because its DNS server is the minishift VM rather than the DNS server of the Kubernetes cluster. Therefore we have no choice but to give the IP address of the service instead of its name. In an OpenShift environment, specify the service name.

As a second step, set the HAproxy log level to debug, because access logs are only produced at debug level.

# oc set env dc/myrouter ROUTER_LOG_LEVEL=debug -n default
deploymentconfig.apps.openshift.io/myrouter updated

Warning

Performance testing is needed to determine how much extra load running in debug mode puts on HAproxy.

As a result of changing the two environment variables above, the HAproxy configuration in the /var/lib/haproxy/conf/haproxy.config file of the router container changed to the following:

# kubectl exec -it myrouter-5-hf5cs /bin/bash -n default
$ cat /var/lib/haproxy/conf/haproxy.config
global
..
log 172.30.82.232 local1 debug

The key point is that the address of the haproxy-exporter service and the debug log level now appear in the log parameter.

Testing the rsyslog server

Generate some traffic through HAproxy, then go back into the haproxy-exporter container and list the contents of the messages file.

# kubectl exec -it haproxy-exporter-744d84f5df-9fj9m /bin/bash -n default
# tail -f /var/log/messages
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy fe_sni stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_no_sni stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy fe_no_sni stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy openshift_default stopped (FE: 0 conns, BE: 1 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_edge_http:dsp:nginx-route stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_http:mynamespace:prometheus-alertmanager-jv69s stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_http:mynamespace:prometheus-server-2z6zc stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_edge_http:mynamespace:test-app-service stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[24]: Proxy be_edge_http:myproject:nginx-route stopped (FE: 0 conns, BE: 0 conns).
Aug 9 12:52:17 192.168.122.223 haproxy[32]: 127.0.0.1:43720 [09/Aug/2019:12:52:17.361] public openshift_default/<NOSRV> 1/-1/-1/-1/0 503 3278 - - SC-- 1/1/0/0/0 0/0 "HEAD / HTTP/1.1"

If we look at the logs of the haproxy-exporter pod, we should see the same entries.

Then call the test application through the router, for example:

http://test-app-service-mynamespace.192.168.42.185.nip.io/test/slowresponse/3000

...
Aug 9 12:57:21 192.168.122.223 haproxy[32]: 192.168.42.1:48266 [09/Aug/2019:12:57:20.636] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.17:8080 1/0/12/428/440 200 135 - - --II 2/2/0/1/0 0/0 "GET /test/slowresponse/1 HTTP/1.1"
Aug 9 12:57:28 192.168.122.223 haproxy[32]: 192.168.42.1:48266 [09/Aug/2019:12:57:21.075] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.17:8080 4334/0/0/3021/7354 200 135 - - --VN 2/2/0/1/0 0/0 "GET /test/slowresponse/3000 HTTP/1.1"
Aug 9 12:57:28 192.168.122.223 haproxy[32]: 192.168.42.1:48266 [09/Aug/2019:12:57:28.430] public be_edge_http:mynamespace:test-app-service/pod:test-app-57574c8466-qbtg8:test-app-service:172.17.0.17:8080 90/0/0/100/189 404 539 - - --VN 2/2/0/1/0 0/0 "GET /favicon.ico HTTP/1.1"
Aug 9 12:57:35 192.168.122.223 haproxy[32]: 192.168.42.1:48268 [09/Aug/2019:12:57:20.648] public public/<NOSRV> -1/-1/-1/-1/15002 408 212 - - cR-- 2/2/0/0/0 0/0 "<BADREQ>"

Testing grok-exporter

Let's query the grok-exporter metrics at http://<pod IP>:9144/metrics, either from inside the haproxy-exporter pod by calling localhost, or from any other pod by using the IP address of the haproxy-exporter pod. In the example below I log into the test-app pod. Among the metrics we should see the haproxy_http_request_duration_seconds_bucket histogram.

# kubectl exec -it test-app-57574c8466-qbtg8 /bin/bash -n mynamespace

$

$ curl http://172.30.213.183:9144/metrics

...

# HELP haproxy_http_request_duration_seconds The request durations of the applications running in openshift that have route defined.

# TYPE haproxy_http_request_duration_seconds histogram

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.1"} 0

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.2"} 1

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="0.4"} 1

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="1"} 2

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="3"} 2

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="8"} 3

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="20"} 3

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="60"} 3

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="120"} 3

haproxy_http_request_duration_seconds_bucket{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service",le="+Inf"} 3

haproxy_http_request_duration_seconds_sum{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service"} 7.9830000000000005

haproxy_http_request_duration_seconds_count{haproxy="haproxy[32]",namespace="mynamespace",pod_name="test-app-57574c8466-qbtg8",service="test-app-service"} 3
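The bucket values above are cumulative: each `le` bucket counts every request whose duration is less than or equal to its bound. As a cross-check, the following Python sketch feeds the total times of the three routed requests from the earlier log excerpt (0.440 s, 7.354 s and 0.189 s) into the same bucket bounds. It reproduces the bucket counts, the `_count` of 3 and a `_sum` of about 7.983 s shown above, which suggests (an inference, not something grok-exporter documents here) that the duration metric is fed from the total time of each routed request. `build_histogram` is a hypothetical helper, not part of grok-exporter.

```python
import math

# Upper bounds taken from the le="..." labels of the /metrics output above.
BUCKETS = [0.1, 0.2, 0.4, 1, 3, 8, 20, 60, 120, math.inf]


def build_histogram(durations, bounds=BUCKETS):
    """Return (cumulative bucket counts, sum, count) like a Prometheus histogram."""
    counts = [0] * len(bounds)
    for d in durations:
        for i, upper in enumerate(bounds):
            if d <= upper:
                # Cumulative buckets: an observation is counted in every bucket
                # whose upper bound it does not exceed.
                counts[i] += 1
    return counts, sum(durations), len(durations)


# Total times (last timer / 1000) of the three routed requests in the log excerpt.
durations = [0.440, 7.354, 0.189]
counts, total, n = build_histogram(durations)

if __name__ == "__main__":
    for upper, c in zip(BUCKETS, counts):
        label = "+Inf" if math.isinf(upper) else f"{upper:g}"
        print(f'haproxy_http_request_duration_seconds_bucket{{le="{label}"}} {c}')
    print(f"haproxy_http_request_duration_seconds_sum {total}")
    print(f"haproxy_http_request_duration_seconds_count {n}")
```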

Prometheus configuration

Static configuration

- job_name: grok-exporter
  scrape_interval: 5s
  metrics_path: /metrics
  static_configs:
  - targets: ['grok-exporter-service.default:9144']

Pod-level scraping

We want the haproxy-exporter pods to be scalable. For this, Prometheus must not scrape the metrics through the service (because the service would then load-balance between the pods) but must address the pods directly. To do this, Prometheus has to fetch, through the Kubernetes API, the Endpoints object belonging to haproxy-exporter, which contains the list of IP addresses of the pods behind the service. We will use the kubernetes_sd_configs element of Prometheus for this. (A prerequisite is that Prometheus can communicate with the Kubernetes API. For details see: Prometheus_on_Kubernetes)

When using kubernetes_sd_configs, we always fetch a list of a given Kubernetes object type from the server (node, service, endpoints, pod), and then select from that list the resource from which we want to collect metrics. We do this by writing filter conditions on the labels of the Kubernetes resource in the relabel_configs section. In this case we want to find the Endpoints object belonging to haproxy-exporter, because from it Prometheus can find all the pods behind the service. So, based on the labels, we want to find the endpoint called haproxy-exporter-service that also has a port named metrics, through which Prometheus can scrape the metrics. The default URL is /metrics, so it does not need to be defined separately; grok-exporter uses it as well.

# kubectl get Endpoints haproxy-exporter-service -n default -o yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: haproxy-exporter-service
...
  ports:
  - name: log-udp
    port: 514
    protocol: UDP
  - name: metrics
    port: 9144
    protocol: TCP
  - name: log-tcp
    port: 514
    protocol: TCP

We look for two labels in the Endpoints list:

- __meta_kubernetes_endpoint_port_name: metrics -> 9144

- __meta_kubernetes_service_name: haproxy-exporter-service

The prometheus.yaml file, i.e. the ConfigMap that contains prometheus.yaml, has to be extended with the following:

- job_name: haproxy-exporter
  scheme: http
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    server_name: router.default.svc
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: endpoints
    namespaces:
      names:
      - default
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: haproxy-exporter-service;metrics
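The effect of the `keep` rule can be illustrated with a small Python simulation. It is a sketch under assumptions: the pod IPs are hypothetical (two replicas, as after scaling), only the two meta labels used here are generated, and the helper functions are not Prometheus code. It mirrors two documented behaviours: the `endpoints` role yields one candidate target per endpoint address and port, and `keep` joins the `source_labels` values with `;` and requires the regex to match the whole joined string.

```python
import re


def discover_endpoint_targets(service, addresses, ports):
    """One candidate target per (address, port) pair, similar to the endpoints role."""
    return [
        {
            "__address__": f"{ip}:{port}",
            "__meta_kubernetes_service_name": service,
            "__meta_kubernetes_endpoint_port_name": port_name,
        }
        for ip in addresses
        for port_name, port in ports
    ]


def keep(targets, source_labels, regex, separator=";"):
    """Drop every target whose joined source label values do not fully match the regex."""
    pattern = re.compile(regex)
    return [
        t for t in targets
        if pattern.fullmatch(separator.join(t.get(name, "") for name in source_labels))
    ]


# Ports from the haproxy-exporter-service Endpoints object; the pod IPs are made up.
PORTS = [("log-udp", 514), ("metrics", 9144), ("log-tcp", 514)]
candidates = discover_endpoint_targets(
    "haproxy-exporter-service", ["172.17.0.21", "172.17.0.22"], PORTS
)
scraped = keep(
    candidates,
    ["__meta_kubernetes_service_name", "__meta_kubernetes_endpoint_port_name"],
    "haproxy-exporter-service;metrics",
)

if __name__ == "__main__":
    print([t["__address__"] for t in scraped])
```

Out of six candidates only the `metrics` port of each pod survives, so every replica is scraped directly instead of through the service's load balancing.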

Apply the updated ConfigMap:

# kubectl apply -f cm-prometheus-server-haproxy-full.yaml

Then wait until Prometheus reloads the configuration file:

# kubectl logs -f -c prometheus-server prometheus-server-75c9d576c9-gjlcr -n mynamespace
...
level=info ts=2019-07-22T20:25:36.016Z caller=main.go:730 msg="Loading configuration file" filename=/etc/config/prometheus.yml

Then, on the http://mon.192.168.42.185.nip.io/targets page, check whether Prometheus can reach the haproxy-exporter target:

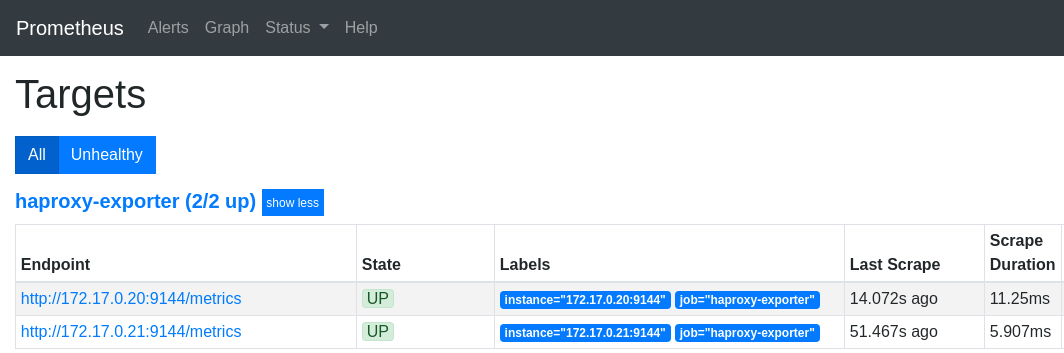

Scaling haproxy-exporter

# kubectl scale deployment haproxy-exporter --replicas=2 -n default
deployment.extensions/haproxy-exporter scaled

# kubectl get deployment haproxy-exporter -n default
NAME               DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
haproxy-exporter   2         2         2            2           3h

Metric types

haproxy_http_request_duration_seconds_bucket

type: histogram

haproxy_http_request_duration_seconds_count

type: counter

The total number of requests that fell into the given histogram:

haproxy_http_request_duration_seconds_count{haproxy="haproxy[39]",job="haproxy-exporter",namespace="mynamespace",pod_name="test-app",service="test-app-service"} 5

haproxy_http_request_duration_seconds_sum

type: counter

The sum of the response times in the given histogram. In the previous example 5 requests arrived in total, and their response times add up to 13.663 s (about 13.7 s):

haproxy_http_request_duration_seconds_sum{haproxy="haproxy[39]",job="haproxy-exporter",namespace="mynamespace",pod_name="test-app",service="test-app-service"} 13.663
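Because `_sum` and `_count` are both counters, dividing them gives the average duration: with the values above, 13.663 s / 5 ≈ 2.73 s per request. In Prometheus this is usually computed per time window, e.g. `rate(haproxy_http_request_duration_seconds_sum[5m]) / rate(haproxy_http_request_duration_seconds_count[5m])`, while percentiles are estimated from the `_bucket` series with `histogram_quantile()`. The Python sketch below mimics both calculations on static numbers. `estimate_quantile` is a simplified re-implementation of the linear interpolation performed by `histogram_quantile()`, assuming 0 < q ≤ 1 and positive bucket bounds; the bucket values are the ones from the earlier `/metrics` output of the test-app pod.

```python
import math


def mean_duration(total_seconds, count):
    """Average request duration from a histogram's _sum and _count."""
    return total_seconds / count if count else math.nan


def estimate_quantile(q, buckets):
    """Estimate the q-quantile (0 < q <= 1) from cumulative (le, count) pairs sorted by le.

    Simplified version of the linear interpolation done by PromQL histogram_quantile().
    """
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_le, prev_count = 0.0, 0
    for le, count in buckets:
        if count >= rank:
            if math.isinf(le):
                # The rank falls into the +Inf bucket: return the highest finite bound.
                return prev_le
            # Assume the observations are spread evenly inside the bucket.
            return prev_le + (le - prev_le) * (rank - prev_count) / (count - prev_count)
        prev_le, prev_count = le, count
    return math.nan


# Cumulative bucket values from the earlier /metrics output (3 requests).
BUCKETS = [(0.1, 0), (0.2, 1), (0.4, 1), (1, 2), (3, 2), (8, 3),
           (20, 3), (60, 3), (120, 3), (math.inf, 3)]

if __name__ == "__main__":
    print(mean_duration(13.663, 5))
    print(estimate_quantile(0.5, BUCKETS))
    print(estimate_quantile(0.9, BUCKETS))
```

The median lands in the (0.4, 1] bucket and is interpolated to about 0.7 s, while the 90th percentile lands in (3, 8] and comes out at about 6.5 s; with so few requests these estimates are coarse.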

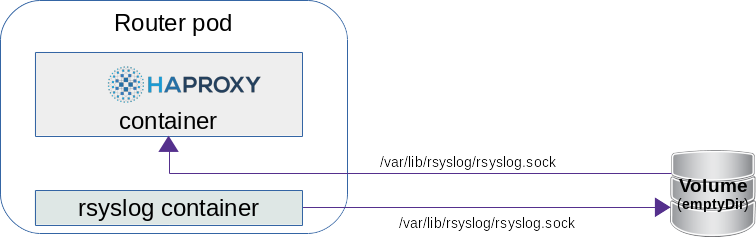

OpenShift router + rsyslog

Starting with OpenShift 3.11, it is possible to define a router for which OpenShift automatically starts a sidecar rsyslog container in the router pod, and configures HAproxy to send its logs through a socket (on an emptyDir volume) to the rsyslog server, which by default writes them to stdout. The rsyslog configuration is stored in a ConfigMap.

A router with a syslog server can be created with the --extended-logging switch of the oc adm router command:

# oc adm router myrouter --extended-logging -n default

info: password for stats user admin has been set to O6S6Ao3wTX

--> Creating router myrouter ...

configmap "rsyslog-config" created

warning: serviceaccounts "router" already exists

clusterrolebinding.authorization.openshift.io "router-myrouter-role" created

deploymentconfig.apps.openshift.io "myrouter" created

service "myrouter" created

--> Success

Switch HAproxy to the debug log level:

# oc set env dc/myrouter ROUTER_LOG_LEVEL=debug -n default
deploymentconfig.apps.openshift.io/myrouter updated

The new router pod has two containers:

# kubectl describe pod/myrouter-2-bps5v -n default
..
Containers:
  router:
    Image:  openshift/origin-haproxy-router:v3.11.0
    Mounts:
      /var/lib/rsyslog from rsyslog-socket (rw)
...
  syslog:
    Image:  openshift/origin-haproxy-router:v3.11.0
    Mounts:
      /etc/rsyslog from rsyslog-config (rw)
      /var/lib/rsyslog from rsyslog-socket (rw)
...
  rsyslog-config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      rsyslog-config
    Optional:  false
  rsyslog-socket:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:  <unset>

We can see that the /var/lib/rsyslog/ directory is mounted into both containers. The rsyslog.sock socket referenced in the HAproxy configuration file is created in this directory.

router container

If we enter the router container, we can see that the configuration has already picked up the logging settings:

# kubectl exec -it myrouter-2-bps5v /bin/bash -n default -c router
bash-4.2$ cat /var/lib/haproxy/conf/haproxy.config
global
...
  log /var/lib/rsyslog/rsyslog.sock local1 debug
...
defaults
...
  option httplog --> Enable logging of HTTP request, session state and timers
...
backend be_edge_http:mynamespace:test-app-service

rsyslog container

# kubectl exec -it myrouter-2-bps5v /bin/bash -n default -c syslog
$ cat /etc/rsyslog/rsyslog.conf
$ModLoad imuxsock
$SystemLogSocketName /var/lib/rsyslog/rsyslog.sock
$ModLoad omstdout.so
*.* :omstdout:
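The socket hand-over between the two containers can be tried locally without OpenShift. The following Python sketch is only an illustration under assumptions: a temporary Unix datagram socket stands in for /var/lib/rsyslog/rsyslog.sock and plays the role of rsyslog's imuxsock input, while the standard library's `logging.handlers.SysLogHandler`, configured with the `local1` facility, stands in for HAproxy's `log ... local1 debug` line (in HAproxy, `debug` is the most verbose level that is forwarded). Only the leading priority is comparable; HAproxy's own message header differs. A debug-level message with facility local1 carries the priority `<143>`, i.e. 17 × 8 + 7. `send_via_unix_socket` and `split_priority` are hypothetical helpers.

```python
import logging
import logging.handlers
import os
import socket
import tempfile


def send_via_unix_socket(message: str) -> bytes:
    """Send one syslog message over a Unix datagram socket and return the raw datagram."""
    sock_dir = tempfile.mkdtemp()
    sock_path = os.path.join(sock_dir, "rsyslog.sock")

    # Receiver: stands in for rsyslog's imuxsock listening on the shared socket file.
    receiver = socket.socket(socket.AF_UNIX, socket.SOCK_DGRAM)
    receiver.bind(sock_path)
    try:
        # Sender: stands in for HAProxy's "log <socket> local1 debug" setting.
        handler = logging.handlers.SysLogHandler(
            address=sock_path,
            facility=logging.handlers.SysLogHandler.LOG_LOCAL1,
        )
        logger = logging.getLogger("haproxy-sketch")
        logger.setLevel(logging.DEBUG)
        logger.propagate = False
        logger.addHandler(handler)
        try:
            logger.debug(message)
        finally:
            logger.removeHandler(handler)
            handler.close()
        # The datagram stays queued on the receiver even after the sender is closed.
        return receiver.recv(4096)
    finally:
        receiver.close()
        os.remove(sock_path)
        os.rmdir(sock_dir)


def split_priority(datagram: bytes):
    """Decode the leading <PRI> of a syslog datagram into (facility, severity)."""
    pri = int(datagram[1:datagram.index(b">")])
    return pri >> 3, pri & 0x07


if __name__ == "__main__":
    data = send_via_unix_socket("GET /test/slowresponse/1 HTTP/1.1")
    print(data, split_priority(data))
```

In the router pod the receiving end is rsyslog, and its omstdout output module turns every received message into a line on the container's stdout.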

If we want to reconfigure rsyslog to forward the logs, for example, to logstash, we only have to modify the ConfigMap. By default rsyslog just writes everything it receives to stdout.

# kubectl get cm rsyslog-config -n default -o yaml
apiVersion: v1
data:
  rsyslog.conf: |
    $ModLoad imuxsock
    $SystemLogSocketName /var/lib/rsyslog/rsyslog.sock
    $ModLoad omstdout.so
    *.* :omstdout:
kind: ConfigMap
metadata:
  name: rsyslog-config
  namespace: default

Viewing the HAproxy logs

# kubectl logs -f myrouter-2-bps5v -c syslog