Difference between revisions of "Cassandra -NoSQL database"


Revision as of 10:39, 7 October 2018


Important concepts

Edgar F. Codd

https://en.wikipedia.org/wiki/Edgar_F._Codd

Edgar Frank "Ted" Codd (19 August 1923 – 18 April 2003) was an English computer scientist who, while working for IBM, invented the relational model for database management, the theoretical basis for relational databases and relational database management systems. He made other valuable contributions to computer science, but the relational model, a very influential general theory of data management, remains his most mentioned, analyzed and celebrated achievement.

Supporting concepts

Referential integrity

Referential integrity is a property of data stating references within it are valid. In the context of relational databases, it requires every value of one attribute (column) of a relation (table) to exist as a value of another attribute (column) in a different (or the same) relation (table).[1]

For referential integrity to hold in a relational database, any column in a base table that is declared a foreign key can contain either a null value, or only values from a parent table's primary key or a candidate key. In other words, when a foreign key value is used it must reference a valid, existing primary key in the parent table. For instance, deleting a record that contains a value referred to by a foreign key in another table would break referential integrity.
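The rule above can be tried out with Python's built-in sqlite3 module. This is only a minimal sketch: the dept and emp tables are made up for illustration, and SQLite stands in for a generic relational database (it enforces foreign keys only after the PRAGMA shown).

```python
import sqlite3


def demo_referential_integrity():
    """Return the violations SQLite reports when a foreign key would break."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    # Hypothetical parent and child tables.
    conn.execute("CREATE TABLE dept (dept_id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute(
        "CREATE TABLE emp ("
        " emp_id INTEGER PRIMARY KEY,"
        " dept_id INTEGER REFERENCES dept(dept_id))"
    )
    conn.execute("INSERT INTO dept VALUES (1, 'eng')")
    conn.execute("INSERT INTO emp VALUES (10, 1)")     # valid reference
    conn.execute("INSERT INTO emp VALUES (11, NULL)")  # NULL is allowed

    errors = []
    try:
        # Foreign key value with no matching parent row.
        conn.execute("INSERT INTO emp VALUES (12, 99)")
    except sqlite3.IntegrityError as exc:
        errors.append(("insert", str(exc)))
    try:
        # Parent row that is still referenced by emp 10.
        conn.execute("DELETE FROM dept WHERE dept_id = 1")
    except sqlite3.IntegrityError as exc:
        errors.append(("delete", str(exc)))
    conn.close()
    return errors
```

Both the dangling insert and the delete of a referenced parent row are rejected, while the NULL foreign key is accepted.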

ACID (Atomicity, Consistency, Isolation, Durability)

Atomicity (database systems)

In database systems, atomicity is one of the ACID (Atomicity, Consistency, Isolation, Durability) transaction properties. An atomic transaction is an indivisible and irreducible series of database operations such that either all occur, or nothing occurs.[1] A guarantee of atomicity prevents updates to the database occurring only partially, which can cause greater problems than rejecting the whole series outright.
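A small sketch of the all-or-nothing behaviour, using Python's sqlite3 module. The account table, the CHECK constraint and the helper names are assumptions made for this example only.

```python
import sqlite3


def make_accounts():
    """Create a hypothetical account table with two rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE account ("
        " id INTEGER PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    with conn:
        conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 50)])
    return conn


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one atomic transaction."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE id = ?",
                (amount, dst),
            )
            # Fails the CHECK constraint if src would go negative.
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE id = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn):
    """Return {account id: balance}."""
    return dict(conn.execute("SELECT id, balance FROM account ORDER BY id"))
```

When the second UPDATE fails, the credit already applied by the first UPDATE is rolled back as well, so the balances are left exactly as they were before the transfer started.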

Consistency

Consistency in database systems refers to the requirement that any given database transaction must change affected data only in allowed ways. Any data written to the database must be valid according to all defined rules, including constraints, cascades, triggers, and any combination thereof.

Isolation

Isolation is typically defined at database level as a property that defines how and when the changes made by one operation become visible to other operations.

Durability

In database systems, durability is the ACID property which guarantees that transactions that have committed will survive permanently.

Normal forms

Codd introduced the concept of normalization and what is now known as the first normal form (1NF) in 1970.[4] Codd went on to define the second normal form (2NF) and third normal form (3NF) in 1971,[5] and Codd and Raymond F. Boyce defined the Boyce-Codd normal form (BCNF) in 1974.[6]

Informally, a relational database relation is often described as "normalized" if it meets third normal form.[7] Most 3NF relations are free of insertion, update, and deletion anomalies.

First normal form

First normal form is an essential property of a relation in a relational database. Database normalization is the process of representing a database in terms of relations in standard normal forms, where first normal form is a minimal requirement.

First normal form enforces these criteria:

- Eliminate repeating groups in individual tables.

- Create a separate table for each set of related data.

- Identify each set of related data with a primary key.

Examples:

https://www.quora.com/What-is-the-difference-between-NF-2NF-and-3NF

- Atomic means the column stores only one thing. A column called FullName, which stores the customer's first and last name, is not atomic. You should have two atomic columns, one for FirstName and another for LastName. Columns storing comma-separated values are also non-atomic.

- If you've ever seen Phone1, Phone2, and Phone3 in your Customers table (each phone column sparser than the prior), then your Customers table doesn't even meet first normal form.
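The repeating Phone1/Phone2/Phone3 group can be removed by moving phone numbers into their own relation, one row per number. The sketch below does this on plain Python dictionaries; the column names and the function name are illustrative assumptions.

```python
def to_first_normal_form(customers):
    """Split repeating phone columns into one row per (customer, phone)."""
    customer_rows, phone_rows = [], []
    for c in customers:
        customer_rows.append(
            {
                "customer_id": c["customer_id"],
                "first_name": c["first_name"],
                "last_name": c["last_name"],
            }
        )
        for column in ("phone1", "phone2", "phone3"):
            number = c.get(column)
            if number:  # skip the sparse, empty columns
                phone_rows.append(
                    {"customer_id": c["customer_id"], "phone": number}
                )
    return customer_rows, phone_rows
```

A customer with no phone simply has no rows in the phone relation, and a fourth number needs no schema change.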

Second normal form

https://en.wikipedia.org/wiki/Second_normal_form

A relation that is in first normal form (1NF) must meet additional criteria if it is to qualify for second normal form. Specifically: a relation is in 2NF if it is in 1NF and no non-prime attribute is dependent on any proper subset of any candidate key of the relation. A non-prime attribute of a relation is an attribute that is not a part of any candidate (Composite) key of the relation.

Put simply, a relation is in 2NF if it is in 1NF and every non-prime attribute of the relation is dependent on the whole of every candidate key.

Example:

Suppose your OrderLines table has a Composite Primary Key of OrderID + ProductID. Any other columns in this table that describe Products are only Functionally Dependent on the ProductID, and have nothing to do with the OrderID. These columns are Partially Functionally Dependent on the Primary Key, and should be removed from the OrderLines table and placed in the Products table.
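As a rough illustration of the OrderLines example, the sketch below builds the decomposed schema in an in-memory SQLite database. The snake_case table names, the columns and the sample rows are assumptions made for the example.

```python
import sqlite3


def build_2nf_schema():
    """Create products and order_lines so product facts live in one place."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE products (
            product_id   INTEGER PRIMARY KEY,
            product_name TEXT NOT NULL
        );
        -- Only columns that depend on the whole key (order_id, product_id).
        CREATE TABLE order_lines (
            order_id   INTEGER NOT NULL,
            product_id INTEGER NOT NULL REFERENCES products(product_id),
            quantity   INTEGER NOT NULL,
            PRIMARY KEY (order_id, product_id)
        );
        INSERT INTO products VALUES (7, 'keyboard'), (8, 'mouse');
        INSERT INTO order_lines VALUES (100, 7, 1), (100, 8, 2), (101, 7, 5);
    """)
    return conn


def order_report(conn, order_id):
    """Return (product_name, quantity) pairs for one order."""
    return conn.execute(
        "SELECT p.product_name, ol.quantity"
        " FROM order_lines AS ol JOIN products AS p USING (product_id)"
        " WHERE ol.order_id = ? ORDER BY p.product_name",
        (order_id,),
    ).fetchall()
```

Renaming a product is now a single-row update in products, and every order line that refers to it sees the new name through the join.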

Third normal form

https://en.wikipedia.org/wiki/Third_normal_form#%22Nothing_but_the_key%22

Third normal form (3NF) is a normal form that is used in normalizing a database design to reduce the duplication of data and ensure referential integrity by ensuring that:

- the entity is in second normal form

- all the attributes in a table are determined only by the candidate keys of that relation and not by any non-prime attributes.

3NF was designed to improve database processing while minimizing storage costs.

An example of a 2NF table that fails to meet the requirements of 3NF is:

| Tournament | Year | Winner | Winner Date of Birth |
|---|---|---|---|
| Indiana Invitational | 1998 | Al Fredrickson | 21 July 1975 |
| Cleveland Open | 1999 | Bob Albertson | 28 September 1968 |
| Des Moines Masters | 1999 | Al Fredrickson | 21 July 1975 |
| Indiana Invitational | 1999 | Chip Masterson | 14 March 1977 |

Because each row in the table needs to tell us who won a particular Tournament in a particular Year, the composite key {Tournament, Year} is a minimal set of attributes guaranteed to uniquely identify a row. That is, {Tournament, Year} is a candidate key for the table.

The breach of 3NF occurs because the non-prime attribute Winner Date of Birth is transitively dependent on the candidate key {Tournament, Year} via the non-prime attribute Winner. The fact that Winner Date of Birth is functionally dependent on Winner makes the table vulnerable to logical inconsistencies, as there is nothing to stop the same person from being shown with different dates of birth on different records.

In order to express the same facts without violating 3NF, it is necessary to split the table into two:

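| Tournament | Year | Winner |
|---|---|---|
| Indiana Invitational | 1998 | Al Fredrickson |
| Cleveland Open | 1999 | Bob Albertson |
| Des Moines Masters | 1999 | Al Fredrickson |
| Indiana Invitational | 1999 | Chip Masterson |

| Winner | Date of Birth |
|---|---|
| Chip Masterson | 14 March 1977 |
| Al Fredrickson | 21 July 1975 |
| Bob Albertson | 28 September 1968 |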

Update anomalies cannot occur in these tables, because unlike before, Winner is now a primary key in the second table, thus allowing only one value for Date of Birth for each Winner.
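The same decomposition can be sketched in a few lines of Python. The rows are taken from the tournament table above; the function name and the choice of dictionaries as stand-ins for relations are assumptions of this example.

```python
ROWS = [
    ("Indiana Invitational", 1998, "Al Fredrickson", "21 July 1975"),
    ("Cleveland Open", 1999, "Bob Albertson", "28 September 1968"),
    ("Des Moines Masters", 1999, "Al Fredrickson", "21 July 1975"),
    ("Indiana Invitational", 1999, "Chip Masterson", "14 March 1977"),
]


def split_to_3nf(rows):
    """Split the 2NF rows into two relations keyed by their determinants."""
    winners = {}      # (tournament, year) -> winner
    birth_dates = {}  # winner -> date of birth
    for tournament, year, winner, date_of_birth in rows:
        # A dictionary key can hold only one value, so a second, different
        # date of birth for the same winner is detected here.
        if birth_dates.setdefault(winner, date_of_birth) != date_of_birth:
            raise ValueError(f"conflicting dates of birth for {winner}")
        winners[(tournament, year)] = winner
    return winners, birth_dates
```

Because Winner is the key of the second mapping, a row that gives Al Fredrickson a different date of birth is rejected instead of being stored silently.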

Database Normalization

https://en.wikipedia.org/wiki/Database_normalization#Normal_forms

Database normalization is the process of restructuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by Edgar F. Codd as an integral part of his relational model.

When an attempt is made to modify (update, insert into, or delete from) a relation, the following undesirable side-effects may arise in relations that have not been sufficiently normalized:

- Update anomaly. The same information can be expressed on multiple rows; therefore updates to the relation may result in logical inconsistencies.

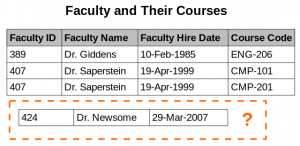

- Insertion anomaly. There are circumstances in which certain facts cannot be recorded at all. For example, each record in a "Faculty and Their Courses" relation might contain a Faculty ID, Faculty Name, Faculty Hire Date, and Course Code. Therefore, we can record the details of any faculty member who teaches at least one course, but we cannot record a newly hired faculty member who has not yet been assigned to teach any courses, except by setting the Course Code to null. This phenomenon is known as an insertion anomaly.

- Deletion anomaly. Under certain circumstances, deletion of data representing certain facts necessitates deletion of data representing completely different facts.

Denormalization

Denormalization is a strategy used on a previously-normalized database to increase performance. In computing, denormalization is the process of trying to improve the read performance of a database, at the expense of losing some write performance, by adding redundant copies of data or by grouping data. It is often motivated by performance or scalability in relational database software needing to carry out very large numbers of read operations. Denormalization should not be confused with Unnormalized form. Databases/tables must first be normalized to efficiently denormalize them.

A normalized design will often "store" different but related pieces of information in separate logical tables (called relations). If these relations are stored physically as separate disk files, completing a database query that draws information from several relations (a join operation) can be slow. If many relations are joined, it may be prohibitively slow. There are two strategies for dealing with this. The preferred method is to keep the logical design normalized, but allow the database management system (DBMS) to store additional redundant information on disk to optimise query response. In this case it is the DBMS software's responsibility to ensure that any redundant copies are kept consistent. This method is often implemented in SQL as indexed views (Microsoft SQL Server) or materialised views (Oracle, PostgreSQL). A view may, among other factors, represent information in a format convenient for querying, and the index ensures that queries against the view are optimised physically.

The more common approach is to denormalize the logical data design. With care this can achieve a similar improvement in query response, but at a cost: it is now the database designer's responsibility to ensure that the denormalized database does not become inconsistent.
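A minimal sketch of a denormalized logical design, using Python's sqlite3 module. The customers and orders tables, the redundant customer_name column and the trigger are all assumptions made for illustration. The trigger is just one possible way for the designer to keep the copy in sync.

```python
import sqlite3


def build_denormalized_db():
    """Create orders with a redundant copy of the customer's name."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE customers (
            customer_id INTEGER PRIMARY KEY,
            name        TEXT NOT NULL
        );
        -- customer_name is a redundant copy so that reads need no join.
        CREATE TABLE orders (
            order_id      INTEGER PRIMARY KEY,
            customer_id   INTEGER NOT NULL REFERENCES customers(customer_id),
            customer_name TEXT NOT NULL
        );
        -- Written by the designer: without it the copies would drift apart.
        CREATE TRIGGER sync_customer_name
        AFTER UPDATE OF name ON customers
        BEGIN
            UPDATE orders SET customer_name = NEW.name
            WHERE customer_id = NEW.customer_id;
        END;
        INSERT INTO customers VALUES (1, 'Mary Rodriguez');
        INSERT INTO orders VALUES (500, 1, 'Mary Rodriguez'),
                                  (501, 1, 'Mary Rodriguez');
    """)
    return conn


def order_names(conn):
    """Read order rows without joining to customers."""
    return conn.execute(
        "SELECT order_id, customer_name FROM orders ORDER BY order_id"
    ).fetchall()
```

Reads get cheaper because no join is needed, while every name change now costs one extra write per order row. That is the read/write trade-off described above.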

Introduction

Like an RDBMS, Cassandra uses separate databases, and within them we can create tables that consist of rows and columns.

- Keyspace: the counterpart of a database in an RDBMS, or of an index in Elasticsearch.

- Column families: a table in the database, to which rows belong. The set of columns can, however, differ from row to row.

eventually consistent

Installation

# docker run --name cassandra -p 9042:9042 -d cassandra

The command-line Cassandra client is part of the distribution and lives in the bin directory. We therefore start a second cassandra container and connect from it to the container named "cassandra" on port 9042.

# docker run -it --rm cassandra /bin/bash

Then start the cqlsh program: cqlsh host port

root@0ce39e38988a:/# cqlsh 192.168.0.101 9042

Connected to Test Cluster at 192.168.0.101:9042.

[cqlsh 5.0.1 | Cassandra 3.11.3 | CQL spec 3.4.4 | Native protocol v4]

Use HELP for help.

cqlsh>

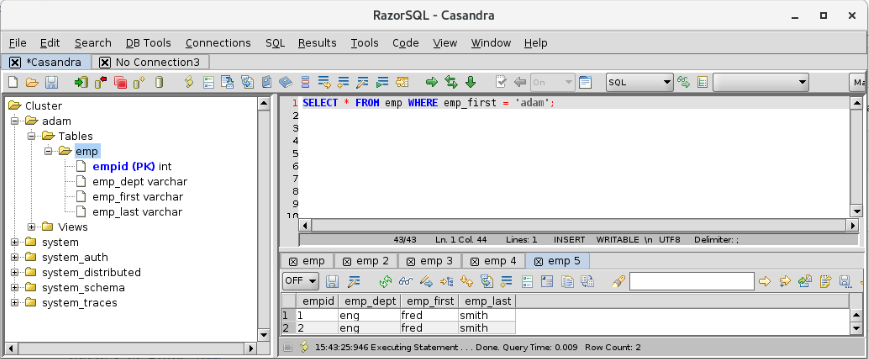

INDEX vs Filter

For efficiency reasons, Cassandra by default only lets us query on columns that have an index. A column without an index can be referenced in the WHERE clause only if we explicitly allow it with the ALLOW FILTERING keyword before the closing ;. The reason is that without an index on the column, Cassandra reads every row and then filters out the ones that match the condition in WHERE. With millions of rows this can become a problem.
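The difference in cost can be shown with a toy in-memory model. This is not how Cassandra is implemented. TinyTable, its methods and the rows_read counter are hypothetical names, used only to contrast an index lookup with a full scan.

```python
class TinyTable:
    """Toy table: indexed lookups are cheap, unindexed ones scan every row."""

    def __init__(self):
        self.rows = {}      # primary key -> row dict
        self.indexes = {}   # column -> {value -> set of primary keys}
        self.rows_read = 0  # how many rows the queries had to touch

    def create_index(self, column):
        index = {}
        for key, row in self.rows.items():
            index.setdefault(row[column], set()).add(key)
        self.indexes[column] = index

    def insert(self, key, row):
        # Assumes `key` is new; a real store would also drop stale entries.
        self.rows[key] = row
        for column, index in self.indexes.items():
            index.setdefault(row[column], set()).add(key)

    def select(self, column, value, allow_filtering=False):
        if column in self.indexes:
            keys = sorted(self.indexes[column].get(value, ()))
            self.rows_read += len(keys)
            return [self.rows[k] for k in keys]
        if not allow_filtering:
            raise ValueError(f"no index on {column!r}; pass allow_filtering=True")
        # Full scan: every row is read, then filtered.
        self.rows_read += len(self.rows)
        return [row for row in self.rows.values() if row[column] == value]
```

With 1000 rows and two matches, the filtered query touches all 1000 rows, while the same query after create_index touches only the two matching ones.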

https://www.datastax.com/2012/01/getting-started-with-cassandra

Queries

https://www.datastax.com/2012/01/getting-started-with-cassandra

Keyspace and cluster

cqlsh:adam> DESCRIBE KEYSPACE adam;

CREATE KEYSPACE adam WITH replication = {'class': 'SimpleStrategy', 'replication_factor': '1'} AND durable_writes = true;

CREATE TABLE adam.emp (

empid int PRIMARY KEY,

emp_dept text,

emp_first text,

emp_last text

) WITH bloom_filter_fp_chance = 0.01

AND caching = {'keys': 'ALL', 'rows_per_partition': 'NONE'}

AND comment = ''

AND compaction = {'class': 'org.apache.cassandra.db.compaction.SizeTieredCompactionStrategy', 'max_threshold': '32', 'min_threshold': '4'}

...

cqlsh:adam> DESCRIBE TABLE adam.emp;

CREATE TABLE adam.emp (

empid int PRIMARY KEY,

emp_dept text,

...

Timestamps

Both the timestamp and the TTL apply to a single field, not to the whole row. In other words, every column name/value pair has its own TTL and timestamp metadata.

cqlsh:adam> SELECT first_name, last_name,

... writetime(last_name) FROM user;

first_name | last_name | writetime(last_name)

------------+-----------+----------------------

Mary | Rodriguez | 1538771050876617

Bill | Nguyen | 1538771031333072

When the TTL expires, the value of the field is set to NULL.

UPDATE user USING TTL 3600 SET last_name = 'McDonald' WHERE first_name = 'Mary' ;

cqlsh:adam> SELECT first_name, last_name, TTL(last_name)

... FROM user WHERE first_name = 'Mary';

first_name | last_name | ttl(last_name)

------------+-----------+----------------

Mary | Rodriguez | 3588
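The per-field behaviour can be modelled in a few lines of Python. This is a conceptual sketch only: Cell and Row are made-up names, time is passed in explicitly as whole seconds, and nothing here reflects Cassandra's storage format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Cell:
    value: str
    write_time: int            # seconds here; Cassandra uses microseconds
    ttl: Optional[int] = None  # seconds to live, None means never expires


class Row:
    """Each column keeps its own write time and TTL."""

    def __init__(self):
        self.cells = {}

    def set(self, column, value, now, ttl=None):
        self.cells[column] = Cell(value, now, ttl)

    def _live(self, column, now):
        cell = self.cells.get(column)
        if cell is None:
            return None
        if cell.ttl is not None and now >= cell.write_time + cell.ttl:
            return None  # expired: reads as NULL
        return cell

    def get(self, column, now):
        cell = self._live(column, now)
        return None if cell is None else cell.value

    def ttl(self, column, now):
        """Remaining seconds to live, or None if there is no TTL."""
        cell = self._live(column, now)
        if cell is None or cell.ttl is None:
            return None
        return cell.write_time + cell.ttl - now
```

Writing last_name with a TTL of 3600 and asking for the remaining TTL 12 seconds later gives 3588, the value in the output above, while first_name in the same row is unaffected by the expiry.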

Data

Creating a database:

cqlsh> create keyspace adam with replication = {'class':'SimpleStrategy','replication_factor':1};

Creating a table:

cqlsh> use adam;

cqlsh:adam> create table emp (empid int primary key, emp_first varchar, emp_last varchar, emp_dept varchar);

Inserting data:

cqlsh:adam> insert into emp (empid, emp_first, emp_last, emp_dept) values (1,'fred','smith','eng');

Warning

The value of the primary key can never be changed after insertion, because it determines which node the row is placed on.
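Why the key pins a row to a node can be sketched with a toy token ring. This is a simplified illustration, not Cassandra's partitioner: MD5 from hashlib is used as a stand-in hash, and the Ring class and the node names are made up.

```python
import bisect
import hashlib


def token(partition_key):
    """Map a key to a 64-bit integer token (stand-in hash, not Murmur3)."""
    digest = hashlib.md5(str(partition_key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


class Ring:
    """Each node owns the range of tokens up to and including its own token."""

    def __init__(self, nodes):
        self._ring = sorted((token(name), name) for name in nodes)
        self._tokens = [t for t, _ in self._ring]

    def node_for(self, partition_key):
        # First node whose token is >= the key's token, wrapping around.
        i = bisect.bisect_left(self._tokens, token(partition_key))
        return self._ring[i % len(self._ring)][1]
```

The node is a pure function of the key, so changing the key of an existing row would in general mean moving the row to a different node. That is why the change has to be done as a delete followed by a new insert.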

Query using the index:

cqlsh:adam> SELECT * FROM adam.emp;

empid | emp_dept | emp_first | emp_last

-------+----------+-----------+----------

1 | eng | fred | smith

2 | eng | fred | smith

Query with filtering:

cqlsh:adam> SELECT * FROM adam.emp WHERE emp_last = 'smith' ALLOW FILTERING;

empid | emp_dept | emp_first | emp_last

-------+----------+-----------+----------

1 | eng | fred | smith

2 | eng | fred | smith

Sets

A set stores each element at most once. It is usually more efficient than a list, where some operations (for example setting or removing an element by position) require the list to be read first.

cqlsh:adam> ALTER TABLE user ADD emails set<text>;

cqlsh:adam> UPDATE user SET emails = {

... 'mary@example.com', 'mary2@example.com' } WHERE first_name = 'Mary';

cqlsh:adam> SELECT emails FROM user WHERE first_name = 'Mary';

emails

-------------------------------------------

{'mary2@example.com', 'mary@example.com'}

(1 rows)

List

cqlsh:adam> ALTER TABLE user ADD

... phone_numbers list<text>;

cqlsh:adam> UPDATE user SET phone_numbers = [

... '1-800-999-9999' ] WHERE first_name = 'Mary';

cqlsh:adam> SELECT phone_numbers FROM user WHERE

... first_name = 'Mary';

phone_numbers

--------------------

['1-800-999-9999']

UPDATE user SET phone_numbers[0] = '480-111-1111' WHERE first_name = 'Mary';

Map

cqlsh:adam> ALTER TABLE user ADD

... login_sessions map<timeuuid, int>;

cqlsh:adam> UPDATE user SET login_sessions =

... { now(): 13, now(): 18} WHERE first_name = 'Mary';

cqlsh:adam> SELECT login_sessions FROM user WHERE

... first_name = 'Mary';

login_sessions

--------------------------------------------------------------------------------------

{601a2e20-c8e3-11e8-8684-6d2c86545d91: 13, 601a2e21-c8e3-11e8-8684-6d2c86545d91: 18}

User defined type

cqlsh:adam> CREATE TYPE address (street text, city text, state text, zip_code int);

cqlsh:adam> ALTER TABLE user ADD addresses map<text, frozen<address>>;

cqlsh:adam> UPDATE user SET addresses = addresses + {'home': { street: '7712 E. Broadway', city: 'Tucson',

... state: 'AZ', zip_code: 85715} } WHERE first_name = 'Mary';

cqlsh:adam>

cqlsh:adam> SELECT addresses FROM user WHERE first_name = 'Mary';

addresses

--------------------------------------------------------------------------------------

{'home': {street: '7712 E. Broadway', city: 'Tucson', state: 'AZ', zip_code: 85715}}

Secondary indexes

The problem with secondary indexes is that the matching rows may be scattered across several nodes, because the primary key determines which node a row is placed on. Since the search has to be run on several nodes, using a secondary index can be very expensive.

When not to use a secondary index:

- If the cardinality is too high. If almost every value is different, practically all nodes have to be visited.

- If the cardinality is too low. It is not good either if almost every row holds the same value, because there will be too many matches.

- On frequently updated fields: if tombstones accumulate faster than Cassandra can process them, updates will start to fail after a while.

CREATE INDEX ON user ( last_name );

Note

Secondary indexes can also be created on the elements of a set, list or map, and even on elements inside a user-defined type, even when those are inside a map.

CREATE INDEX ON user ( emails );

From Cassandra 3.4 onward we can use the SASI secondary index implementation developed by Apple, which offers more functionality than the built-in one:

- Searching with less-than and greater-than comparisons.

- Using LIKE on string fields.

When creating the secondary index, we have to state that we want to use a custom implementation.

CREATE CUSTOM INDEX user_last_name_sasi_idx ON user (last_name) USING 'org.apache.cassandra.index.sasi.SASIIndex';

Database GUI

Many graphical tools can connect to a Cassandra database. Most people recommended RazorSQL, which is a paid product: https://razorsql.com/